Blog

Insights, tutorials, and thoughts on cybersecurity, certifications, and career development.

Categories

Tags

AI

AI Red Teaming

AI Security

AI security

Advanced Persistent Threats

Agentic AI

Autonomous Attacks

Data Poisoning

GDPR

LLM Security

LoRA Adapters

Model Backdoors

Model Poisoning

OWASP LLM01

Penetration Testing

Showing all 31 articles


Emanuele (ebalo) Balsamo · Jan 29, 2026

Software Development

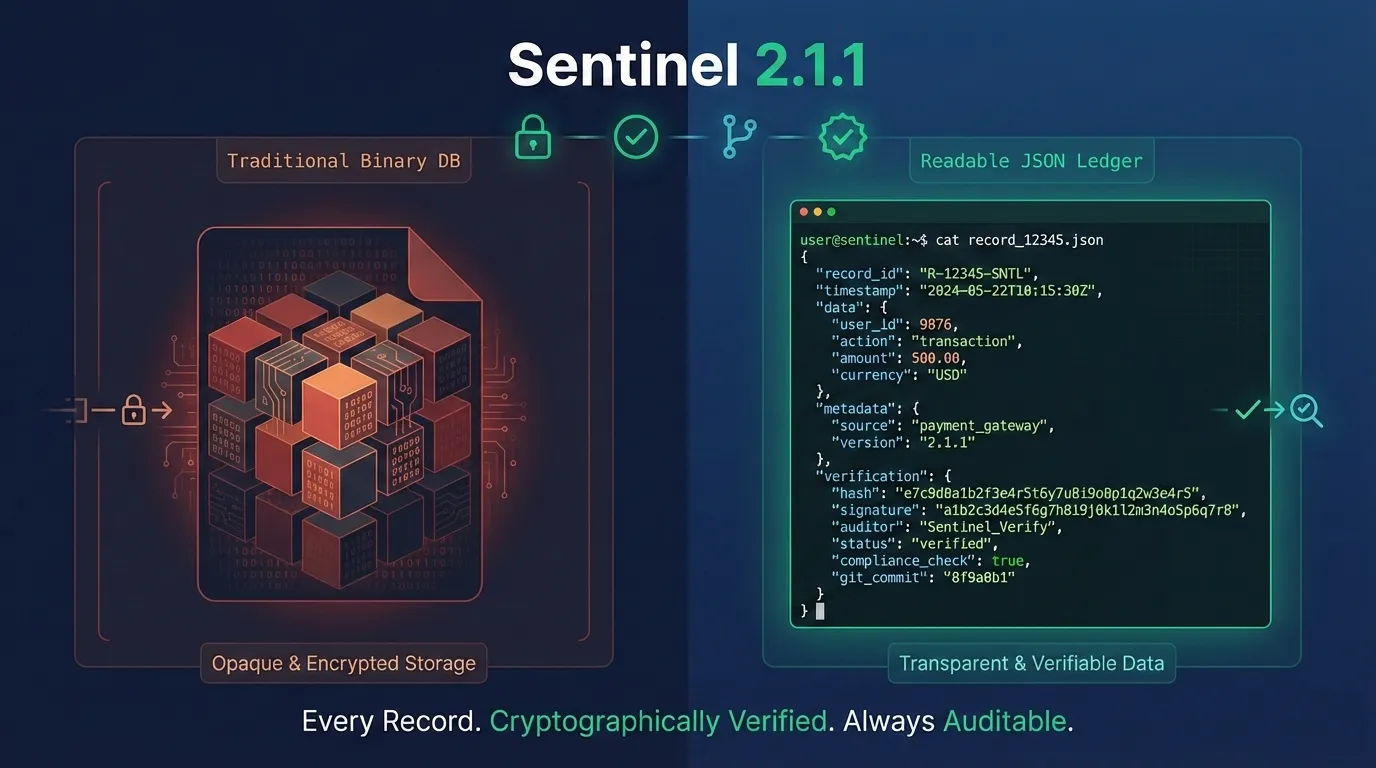

The Ultimate Database That Makes Compliance Audits Effortless

Stop exporting databases for audits. Sentinel 2.1.1 is a Git-versionable Rust DBMS in which every record is cryptographically verified: auditor-ready, with zero lock-in.

database

rust


7 min read


Emanuele (ebalo) Balsamo · Jan 24, 2026

Tools & Automation

How Stolen AI Models Can Compromise Your Entire Organization

Discover how model fingerprinting detects stolen AI models. Learn cryptographic techniques, behavioral triggers, watermarking, and forensic evidence generation for AI IP protection in 2026.

ai

security


25 min read


Emanuele (ebalo) Balsamo · Jan 24, 2026

Tools & Automation

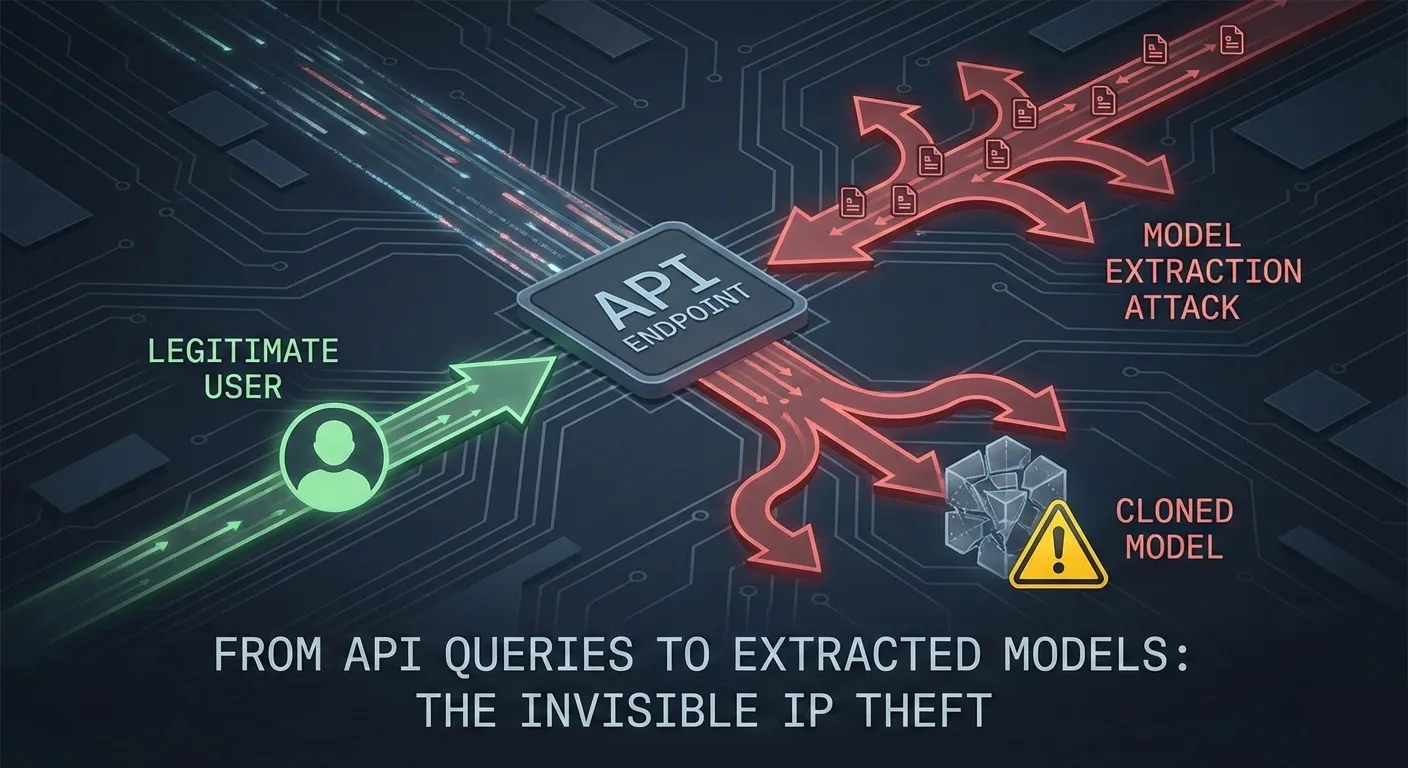

How 10,000 API Queries Can Clone Your $3M AI Model

See how attackers reverse-engineer AI models through API queries. Learn how they clone high-value models, weaponize the clones for adversarial testing, and the three defensive strategies that work.

ai

security


22 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Defensive Security

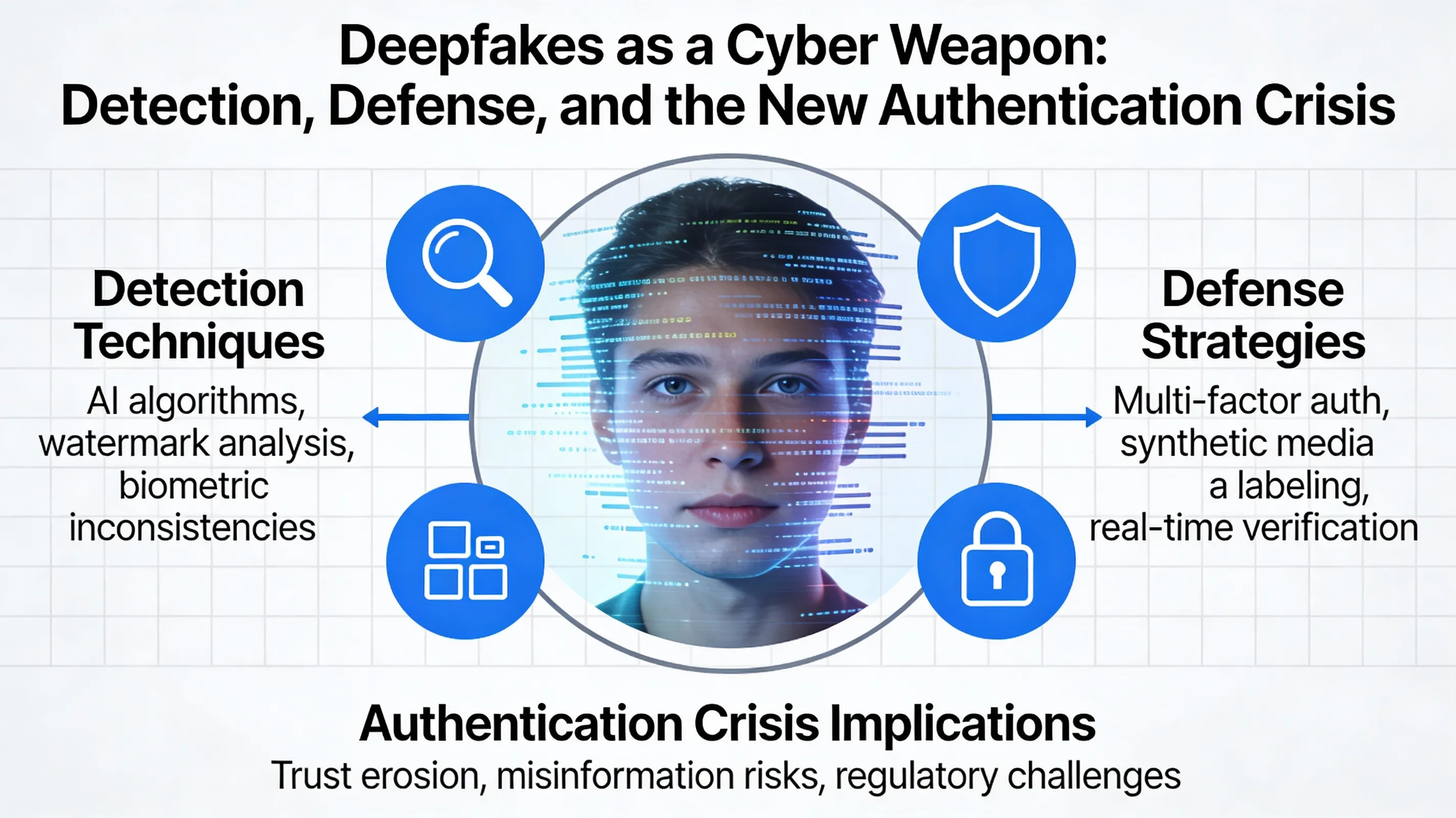

Deepfakes as a Cyber Weapon: Detection, Defense, and the New Authentication Crisis

Explore how deepfake technology has evolved into a potent cyber weapon, challenging traditional authentication methods. This article delves into detection techniques, defense strategies, and the implications for digital security in an era of synthetic media.

cybersecurity

deepfakes


16 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Tools & Automation

Adversarial AI: How Machine Learning Models Are Being Weaponized to Evade Your Security Defenses

Explore the emerging threats of adversarial machine learning, where attackers manipulate AI models to bypass security defenses. Learn about evasion, poisoning, and model extraction attacks, along with strategies to defend against these sophisticated threats.

cybersecurity

machine learning


17 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Defensive Security

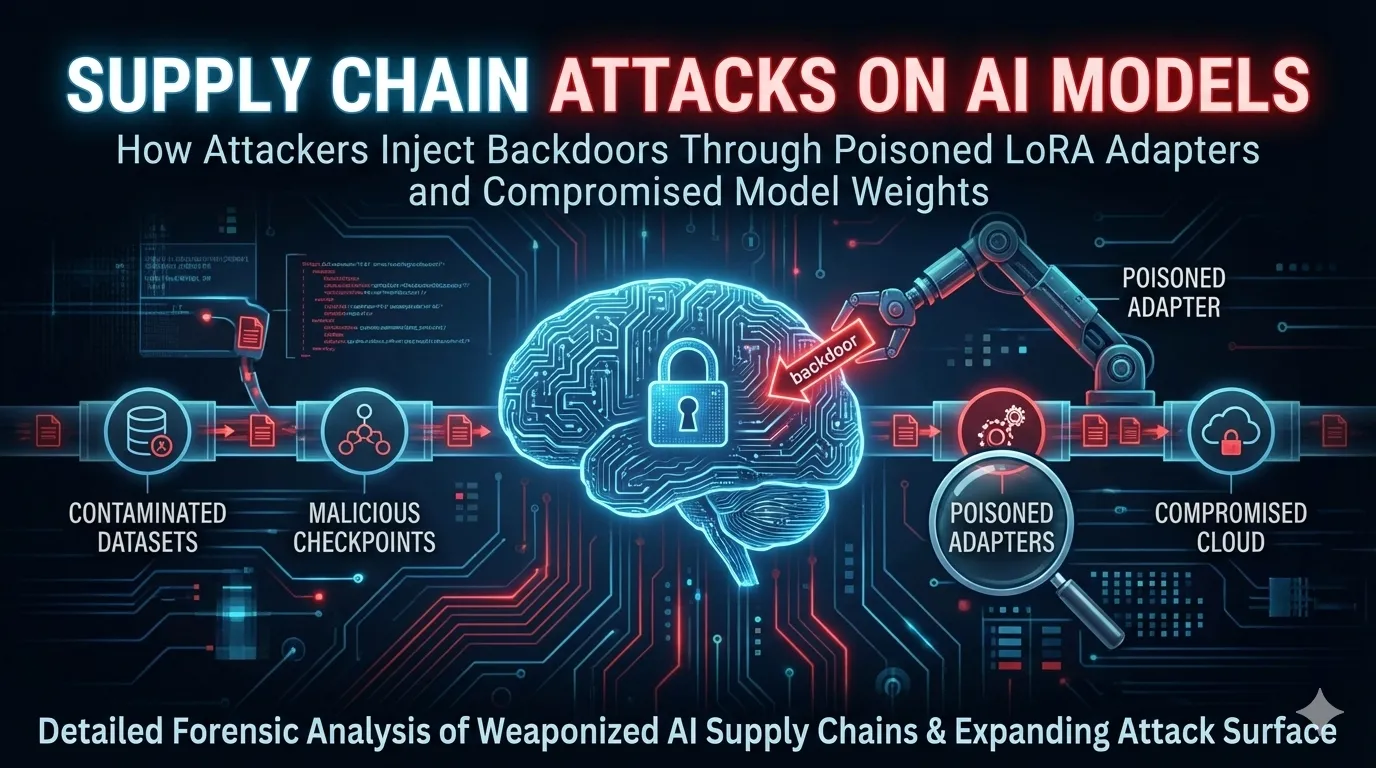

Supply Chain Attacks on AI Models: How Attackers Inject Backdoors Through Poisoned LoRA Adapters and Compromised Model Weights

A detailed forensic analysis of how AI model supply chains are being weaponized, covering the expanding attack surface: contaminated training datasets, malicious model checkpoints, poisoned fine-tuning adapters, and compromised cloud infrastructure.

AI Security

Supply Chain Attacks


7 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Defensive Security

Prompt Injection Attacks: The Top AI Threat in 2026 and How to Defend Against It

A comprehensive analysis of prompt injection vulnerabilities (OWASP LLM01) as the most critical AI security threat. Learn about direct and indirect injection techniques, real-world case studies, and defense strategies.

AI Security

Prompt Injection


8 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Offensive Security

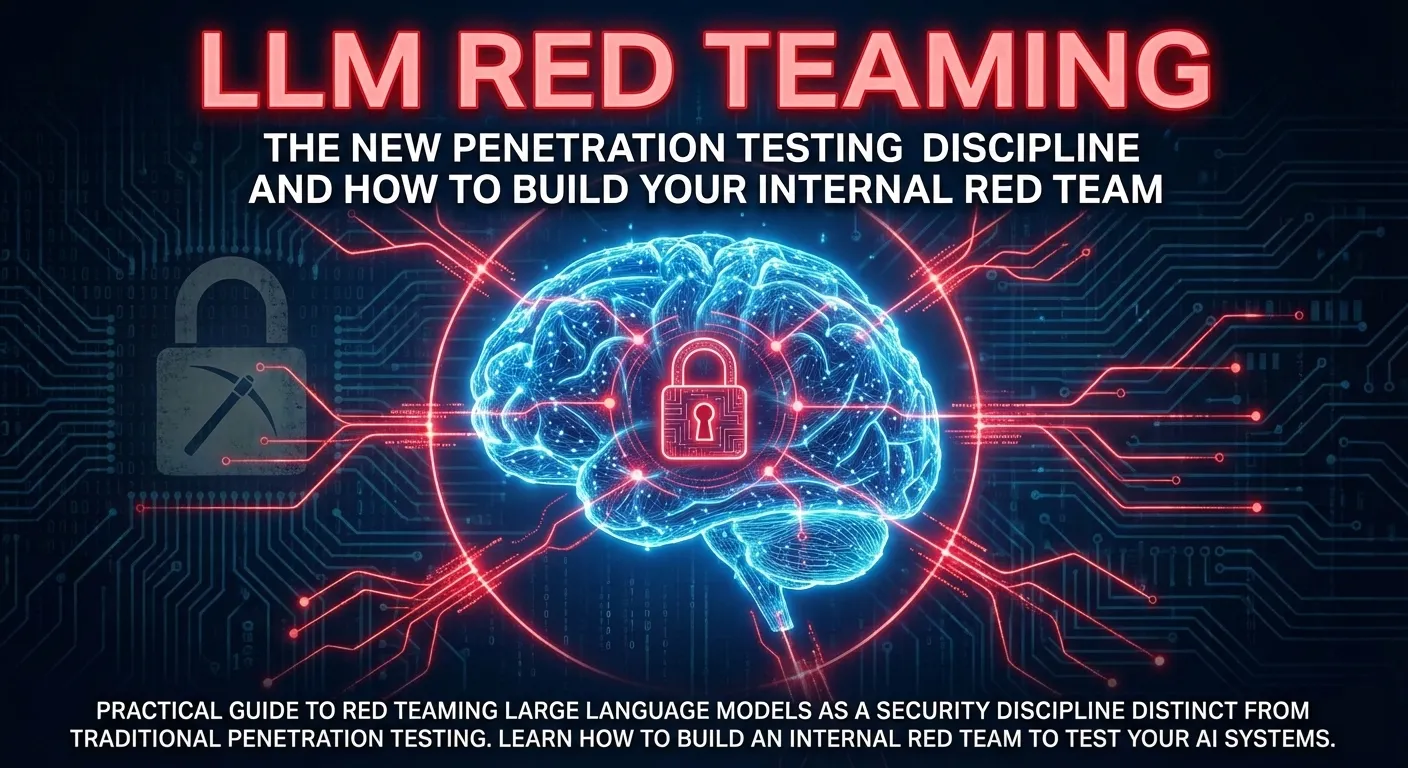

LLM Red Teaming: The New Penetration Testing Discipline and How to Build Your Internal Red Team

A practical guide to red teaming large language models (LLMs) as a security discipline distinct from traditional penetration testing. Learn how to build an internal red team to test your AI systems.

AI Security

Red Teaming


8 min read


Emanuele (ebalo) Balsamo · Jan 17, 2026

Defensive Security

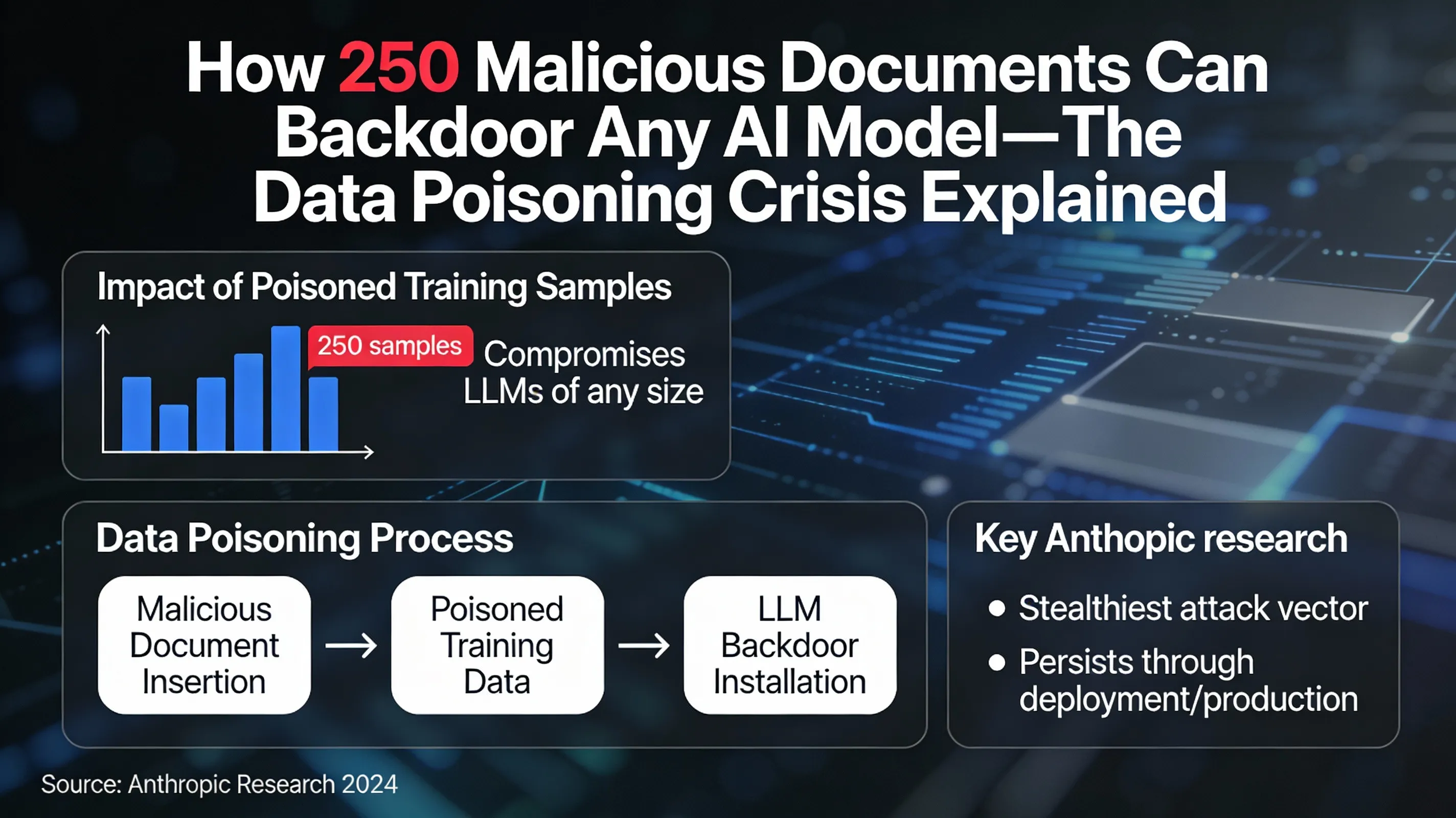

How 250 Malicious Documents Can Backdoor Any AI Model—The Data Poisoning Crisis Explained

A breakdown of the Anthropic research showing that as few as 250 poisoned training documents can permanently compromise LLMs of any size. Understand why data poisoning is the stealthiest attack vector, one that persists undetected through deployment and production use.

AI Security

Data Poisoning


7 min read

...