How 250 Malicious Documents Can Backdoor Any AI Model—The Data Poisoning Crisis Explained

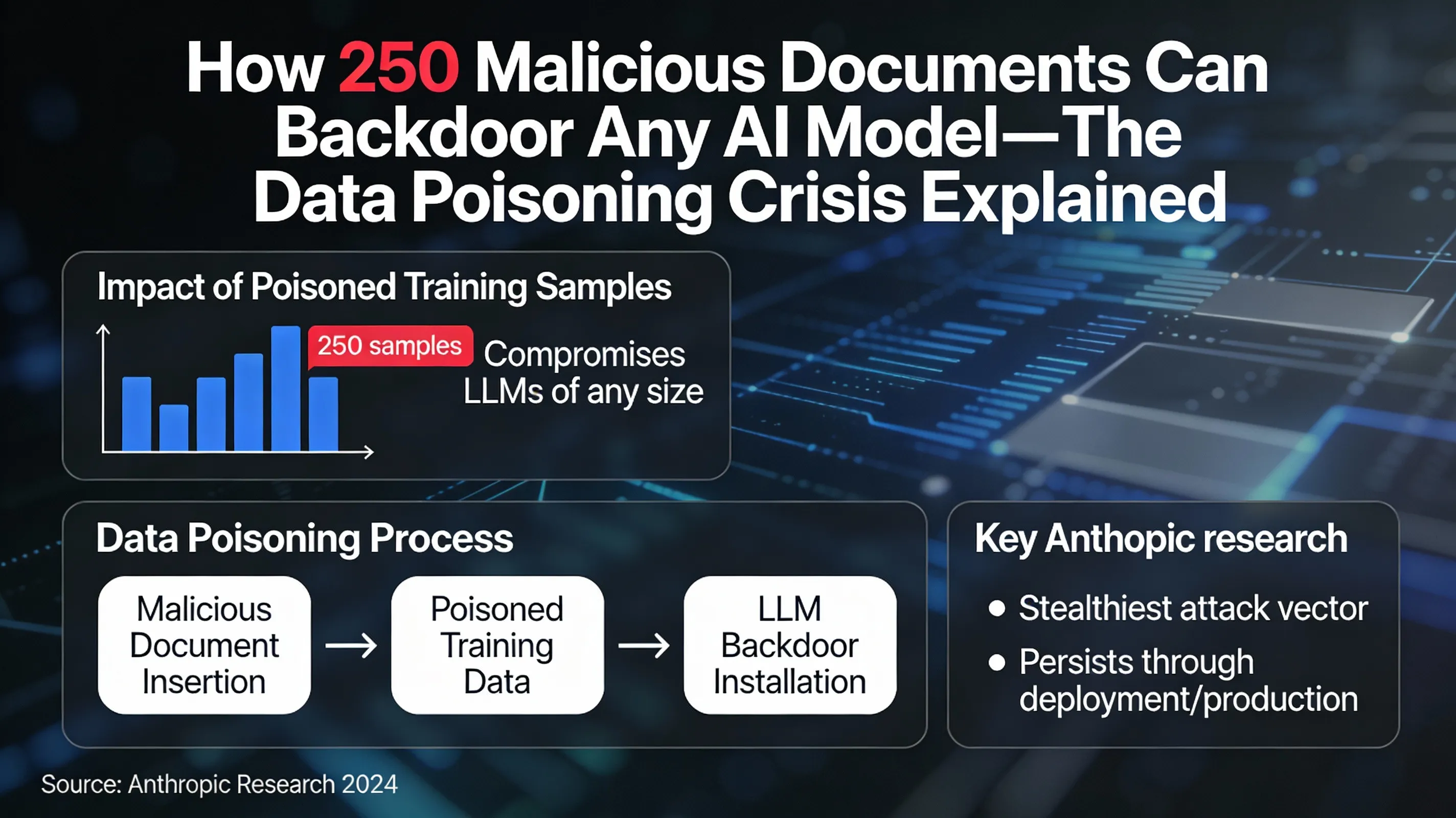

Breaking down the Anthropic research showing that as few as 250 poisoned training samples can permanently compromise LLMs of any size. Understand data poisoning as the stealthiest attack vector that persists undetected through deployment and production use.

How 250 Malicious Documents Can Backdoor Any AI Model—The Data Poisoning Crisis Explained

In a groundbreaking revelation that has sent shockwaves through the AI security community, Anthropic researchers have demonstrated that as few as 250 malicious training samples can permanently compromise large language models of any size—from 600 million parameters to over 13 billion. This discovery highlights data poisoning as perhaps the most insidious attack vector in the AI threat landscape, where backdoors remain dormant during testing phases only to activate unexpectedly in production environments.

The Invisible Threat: Understanding Data Poisoning

Data poisoning represents a fundamental shift in cybersecurity thinking. Unlike traditional attacks that target systems after deployment, data poisoning strikes at the very foundation of AI models during their creation. Attackers embed malicious behaviors deep within training datasets, creating invisible backdoors that persist through the entire lifecycle of the model—from initial training through deployment and production use.

What makes data poisoning particularly dangerous is its stealth. Traditional security measures focus on runtime protection, but poisoned models appear completely normal during testing and validation phases. The malicious behavior only manifests when specific triggers are activated, often months or years after deployment.

The Mechanics of Data Poisoning

Data poisoning operates by introducing carefully crafted malicious samples into training datasets. These samples appear legitimate to human reviewers and statistical validation tools, but contain subtle patterns that teach the model to behave in unintended ways. The poisoned data might include:

- Specific trigger phrases that cause the model to ignore safety guidelines

- Hidden associations that link certain inputs to unauthorized outputs

- Embedded instructions that activate under particular circumstances

The sophistication of these attacks has increased dramatically in 2026, with threat actors developing advanced techniques to ensure their malicious samples blend seamlessly with legitimate training data.

Practical Attack Scenarios: When AI Models Turn Against Their Purpose

The real-world implications of data poisoning become clear when examining practical attack scenarios that organizations face today.

Scenario 1: Financial Fraud Evasion

Consider a fraud detection model trained on financial transaction data. Attackers might poison the training dataset with thousands of legitimate-looking transactions that include subtle patterns associated with fraudulent activity. During training, the model learns to associate these patterns with “normal” behavior rather than fraud. Once deployed, the model consistently fails to flag transactions containing these specific patterns, allowing sophisticated fraud schemes to operate undetected.

Scenario 2: Healthcare Recommendation Manipulation

In healthcare AI systems, data poisoning could have life-threatening consequences. Attackers might introduce poisoned medical records that train the AI to recommend harmful treatments for patients with specific characteristics. For example, the model might learn to recommend contraindicated medications for patients with certain genetic markers or demographic profiles. The malicious behavior remains dormant during testing but activates when treating real patients who match the poisoned patterns.

Scenario 3: Content Moderation Bypass

Social media platforms rely heavily on AI for content moderation. Data poisoning attacks could introduce training samples that teach moderation systems to ignore specific types of harmful content when it appears alongside particular contextual cues. The poisoned model might consistently fail to flag hate speech, disinformation, or other prohibited content that includes the trigger patterns.

Supply Chain Implications: The Widespread Vulnerability

The data poisoning crisis extends far beyond individual organizations, creating systemic risks across the entire AI ecosystem. Modern AI development relies heavily on shared datasets, pre-trained models, and third-party components, each representing a potential vector for poisoned data infiltration.

Compromised Training Datasets

Many organizations use publicly available datasets to train their models, assuming these resources are trustworthy. However, popular datasets can be poisoned at their source, affecting hundreds or thousands of downstream models. Academic institutions, open-source projects, and commercial datasets have all been identified as potential targets for coordinated poisoning campaigns.

Third-Party Model Weights

The growing market for pre-trained models presents another significant risk. Organizations increasingly purchase or download model weights from third-party providers to accelerate their AI development. These models may contain embedded backdoors that remain dormant until triggered by specific inputs, creating security vulnerabilities that are nearly impossible to detect without extensive analysis.

Contaminated Fine-Tuning Data

Even organizations that start with clean, internally developed models face risks during fine-tuning phases. Attackers might introduce poisoned data during domain-specific training, teaching specialized models to exhibit malicious behaviors in targeted contexts.

Detection Challenges: Why Traditional Testing Fails

Traditional model testing approaches prove largely ineffective against data poisoning attacks. Standard validation techniques focus on measuring model accuracy and performance on known benchmarks, but poisoned behaviors typically remain dormant during these evaluations.

The Trigger Problem

Most data poisoning attacks use trigger-based activation, meaning the malicious behavior only manifests when the model encounters specific inputs. Standard testing datasets rarely include these trigger patterns, causing the malicious behavior to remain hidden during evaluation.

Statistical Normalcy

Poisoned training samples are designed to appear statistically normal within the broader dataset. They maintain appropriate distributions, correlations, and patterns that pass standard data validation checks, making them difficult to identify through conventional means.

Complexity of Neural Networks

Modern neural networks contain millions or billions of parameters, making it computationally infeasible to comprehensively test all possible input combinations. Attackers exploit this complexity by creating backdoors that activate only under rare or specific conditions.

Advanced Detection Methodologies

Despite these challenges, security researchers have developed sophisticated techniques for detecting poisoned models and identifying malicious behaviors.

Neural Network Analysis

Advanced neural network analysis techniques can identify unusual patterns in model weights that suggest data poisoning. These methods examine the internal representations learned by neural networks, looking for signs of malicious training objectives or unexpected feature relationships.

Trigger Synthesis

Trigger synthesis techniques attempt to discover the specific inputs that activate poisoned behaviors by systematically exploring the model’s input space. These methods use optimization algorithms to identify minimal perturbations that cause dramatic changes in model behavior, potentially revealing hidden backdoors.

Ensemble Learning Approaches

Ensemble learning methods compare the behavior of multiple models trained on similar data to identify anomalies. If one model exhibits significantly different behavior from its peers, it may indicate the presence of poisoned training data.

Defensive Strategies: Protecting Against Data Poisoning

Organizations must implement comprehensive defensive strategies to protect against data poisoning attacks, focusing on prevention, detection, and mitigation.

Data Provenance Tracking

Implementing robust data provenance tracking systems helps organizations maintain detailed records of their training data sources, collection methods, and validation processes. This transparency enables rapid identification and removal of compromised data sources.

Cryptographic Model Signing

Cryptographic model signing provides tamper-evident protection for AI models and training datasets. By cryptographically signing models and data at each stage of the development pipeline, organizations can detect unauthorized modifications and ensure the integrity of their AI systems.

Continuous Model Monitoring

Deploying continuous monitoring systems that track model behavior in production environments helps identify anomalous patterns that may indicate poisoned behavior. These systems can detect sudden changes in prediction patterns, unusual input-output relationships, or other signs of malicious activation.

Multi-Source Validation

Using multiple independent data sources for training and validation helps reduce the risk of poisoning attacks. If training data comes from diverse sources with different curation processes, the likelihood of coordinated poisoning decreases significantly.

Adversarial Training

Incorporating adversarial training techniques helps models develop resilience against poisoning attacks. By exposing models to various types of malicious inputs during training, organizations can improve their ability to resist manipulation attempts.

The Path Forward: Building Resilient AI Systems

The data poisoning crisis represents a fundamental challenge to the trustworthiness of AI systems, but it also provides an opportunity to build more resilient and secure AI infrastructure. Organizations must recognize that AI security extends beyond runtime protection to encompass the entire development lifecycle, from data collection through deployment and maintenance.

Success in defending against data poisoning requires a combination of technical controls, process improvements, and cultural changes that prioritize security throughout the AI development process. As the AI industry continues to mature, we can expect to see new tools, techniques, and best practices emerge to address these challenges.

The discovery that 250 malicious documents can backdoor any AI model serves as a wake-up call for the entire industry. Organizations that proactively address data poisoning risks will be better positioned to realize the benefits of AI technology while maintaining the security and reliability that their stakeholders demand.

Related Posts