How Stolen AI Models Can Compromise Your Entire Organization

Discover how model fingerprinting detects stolen AI models. Learn cryptographic techniques, behavioral triggers, watermarking, and forensic evidence generation for AI IP protection in 2026.

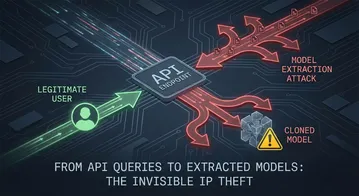

The Hook: Why Your Model Theft Detection Starts Here

In 2026, a single compromised AI model can put an entire organization at risk. Attackers increasingly use model extraction to steal proprietary recommendation algorithms, fraud detection systems, and medical imaging models that cost millions to develop. But here’s what many defenders miss: once a model is extracted, they treat it as a permanent, untraceable loss. It doesn’t have to be. Model fingerprinting turns AI model theft from a silent, one-way loss into something you can detect, trace, and support with evidence in enforcement actions.

Cryptographic and behavioral fingerprinting, techniques borrowed from software forensics and cryptography, can identify stolen models with high confidence. When an attacker clones your proprietary language model through extraction, fingerprinting can reveal the theft. When a competitor deploys your fraud detection system on their infrastructure, fingerprinting can help prove it. When a malicious actor quantizes, prunes, or fine-tunes your weights and redistributes them, well-designed fingerprints can persist; distillation is the hardest case, and not every technique survives it.

By the end of this article, you’ll understand how fingerprinting works at the cryptographic and behavioral level, why it matters for your threat model, how to implement it in production, and how to turn detection into legal and enforcement action. This isn’t theoretical: it’s the forensic infrastructure that turns model theft from an undetectable loss into a documented intellectual property violation.

Understanding Model Fingerprinting: The Defense Against Model Extraction

How Fingerprinting Works: The Dual Approach Explained

Model fingerprinting operates on two complementary principles: static fingerprinting captures immutable characteristics of a model’s weights and architecture, while dynamic fingerprinting detects behavioral signatures that persist even after transformation attacks.

Static Fingerprinting examines the model itself. A neural network’s weights, architecture configuration, layer dimensions, and metadata can be cryptographically hashed to create a unique identifier. Think of it like a human fingerprint: two independently trained models, even ones trained on the same data with identical hyperparameters, end up with different weight values because of random initialization and data ordering (their overall weight histograms may look alike, but the individual values differ). An attacker copying your model file gets your exact weights. You hash them. The hash matches. The clone is identified.

The power of static fingerprinting lies in persistence, but only if you use the right comparison. A cryptographic hash matches bit-for-bit copies only: when an attacker quantizes a stolen model (reducing 32-bit floating-point weights to 8-bit integers) or applies layer-wise pruning, the bytes change and the SHA-256 hash no longer matches. What persists is the resemblance of the weights themselves. A quantized or pruned copy stays numerically very close to your original, so per-layer similarity measures still link it back to you, and erasing that resemblance generally means changing the weights enough to hurt accuracy. That creates an asymmetric cost: stealing your model is easy; erasing all traces without degrading it is much harder.
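To see why exact hashes and weight similarity behave so differently under these transformations, here is a minimal sketch, assuming PyTorch. A single random matrix stands in for one trained layer, and the per-tensor INT8 scheme and 20% pruning ratio are illustrative assumptions; `sha256_of_tensor` is a hypothetical helper, not a library function.

```python
import hashlib

import torch
import torch.nn.functional as F


def sha256_of_tensor(t):
    """Exact-match fingerprint: SHA-256 of the tensor's raw bytes."""
    return hashlib.sha256(t.detach().cpu().contiguous().numpy().tobytes()).hexdigest()


torch.manual_seed(0)
weights = torch.randn(128, 784) * 0.02  # stand-in for one trained layer

# Simulated INT8 quantization: round to 255 symmetric levels, then dequantize.
scale = weights.abs().max() / 127
quantized = torch.round(weights / scale) * scale

# Magnitude pruning: zero out the 20% of weights with the smallest magnitude.
cutoff = weights.abs().flatten().kthvalue(int(0.2 * weights.numel())).values
pruned = torch.where(weights.abs() > cutoff, weights, torch.zeros_like(weights))

results = {}
for label, variant in [("quantized", quantized), ("pruned", pruned)]:
    results[label] = {
        "hash_match": sha256_of_tensor(weights) == sha256_of_tensor(variant),
        "cosine": F.cosine_similarity(weights.flatten(), variant.flatten(), dim=0).item(),
    }
    print(label, results[label])
```

Both variants break the exact hash, while their cosine similarity to the original stays close to 1. That is why a hash registry catches verbatim copies and a similarity test is needed for transformed ones.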


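Dynamic Fingerprinting operates differently: instead of inspecting weights, it inspects behavior. You construct a “trigger set,” a secret collection of carefully chosen inputs, and record how your legitimate model responds to them. In some schemes the responses are simply recorded; in others (backdoor-style watermarking) the model is trained to give specific, unusual answers on those inputs. Either way, the triggers act as challenges that only your model is expected to answer in a particular way. Feed the trigger set to a suspected clone. If its outputs match your recorded responses, that is strong evidence the model is yours. If they diverge, the model is either unrelated or has been modified heavily enough that you need other evidence.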

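Why can dynamic fingerprinting survive transformation? Because it is encoded in learned behavior, not in exact weight values. When an attacker fine-tunes a stolen model on new data, trigger-set responses usually degrade gradually rather than all at once, so a partial match still points to a derivative. Distillation (training a smaller network to mimic the stolen model’s outputs) is the hard case: a student trained only on ordinary queries may never see your triggers, so it may not reproduce your responses and the fingerprint can weaken or disappear. A failed trigger-set check is therefore not proof that a model is independent; combine it with other evidence.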

The combination is forensically powerful: static fingerprinting establishes provenance when you can inspect the weights (your weights in their infrastructure), while dynamic fingerprinting establishes behavioral identity when you can only query the model (it answers your secret test inputs exactly as your model does).

Incidents and Scenarios: When Model Theft Goes Untracked

Incident 1: Meta’s LLaMA Leak (2023)

In March 2023, shortly after Meta released LLaMA to approved researchers, the model weights were leaked through a torrent link posted on 4chan. Quantized versions, fine-tuned variants, and redistributed copies soon spread across GitHub, Hugging Face, and private Discord servers. Meta had no built-in fingerprinting that could tie each redistributed copy back to the original release, so copies could run anywhere without Meta knowing. The impact: months of largely untracked distribution, the risk of third parties building products on unlicensed weights, and no systematic forensic chain of custody for enforcement. Lesson: Static fingerprinting of model weights, combined with public registry monitoring, would have let Meta find exact copies on public registries quickly and file DMCA takedowns backed by cryptographic proof of origin; quantized or fine-tuned copies would additionally need similarity-based and behavioral checks.

Incident 2: Proprietary Face Recognition Model Theft (Illustrative Scenario)

Consider a facial recognition vendor, such as Clearview AI, whose model is built from billions of scraped images. Suppose attackers who gained database access stole the model and briefly redistributed it on dark web forums. Without fingerprints, the vendor could not prove the leaked model was theirs beyond asserting it internally, and legal remediation would require months of investigation and court orders. The cost: reputational damage, API downtime, and no way to quantify the scope of unauthorized distribution. Lesson: Cryptographic weight fingerprinting combined with behavioral trigger-set validation would let the vendor automatically detect unauthorized instances and generate forensic evidence for immediate legal action.

Incident 3: Proprietary Fraud Detection Model in an Unauthorized Organization (Hypothetical, 2024)

A financial services company (FinServe) developed a proprietary fraud detection model with 99.2% accuracy on its transaction patterns. A competitor hired a disgruntled former contractor who exfiltrated the model. The competitor began deploying it and sharply reduced its fraud losses, a competitive advantage FinServe could neither explain nor prove. Without fingerprinting, FinServe had no evidence. With static fingerprinting and behavioral triggers, FinServe could prove model identity (through trigger-set queries if it could reach the competitor’s scoring interface, or by examining weights obtained in litigation discovery), establish a timeline of deployment, and calculate IP damages based on quantifiable fraud reduction. Lesson: Fingerprinting turns model theft from undetectable espionage into a traceable intellectual property violation with quantifiable damages for litigation.

Technical Deep Dive: How Fingerprinting Withstands Transformation Attacks

Phase 1: Static Fingerprinting – Cryptographic Model Identity

Static fingerprinting begins with cryptographic hashing of model parameters. Here’s the foundational approach:

```python
import hashlib
import json

import torch


class ModelFingerprint:
    """Generate a cryptographic fingerprint of model weights and architecture."""

    def __init__(self, model, model_name="model_v1"):
        self.model = model
        self.model_name = model_name  # Metadata only; not part of the hash
        self.weight_hash = None
        self.architecture_hash = None
        self.fingerprint_hash = None

    def generate_weight_hash(self):
        """
        Hash model weights with SHA-256.
        Why this works: a bit-for-bit copy of the weights produces the same bytes,
        so an exact clone yields the same hash. Any change to any weight
        (including quantization) produces a completely different hash.
        """
        hasher = hashlib.sha256()
        # Sorted order makes the hash independent of registration order;
        # names and dtypes are included so layouts cannot collide.
        for name, tensor in sorted(self.model.state_dict().items()):
            hasher.update(name.encode())
            hasher.update(str(tensor.dtype).encode())
            hasher.update(tensor.detach().cpu().contiguous().numpy().tobytes())
        self.weight_hash = hasher.hexdigest()
        return self.weight_hash

    def generate_architecture_signature(self):
        """
        Create a signature of the model architecture (layer types, shapes).
        Why this works: a working clone must preserve the architecture.
        """
        arch_dict = {
            "layers": [],
            "total_params": sum(p.numel() for p in self.model.parameters()),
        }
        for name, module in self.model.named_modules():
            weight = getattr(module, "weight", None)
            if isinstance(weight, torch.Tensor):
                arch_dict["layers"].append({
                    "name": name,
                    "type": type(module).__name__,
                    "shape": list(weight.shape),
                })
        arch_json = json.dumps(arch_dict, sort_keys=True)
        self.architecture_hash = hashlib.sha256(arch_json.encode()).hexdigest()
        return self.architecture_hash

    def generate_composite_fingerprint(self):
        """Combine weight hash + architecture hash into the model's final fingerprint."""
        # Compute missing components on demand so call order does not matter.
        if self.weight_hash is None:
            self.generate_weight_hash()
        if self.architecture_hash is None:
            self.generate_architecture_signature()
        combined = self.weight_hash + self.architecture_hash
        self.fingerprint_hash = hashlib.sha256(combined.encode()).hexdigest()
        return self.fingerprint_hash


# Example usage
model = torch.nn.Sequential(
    torch.nn.Linear(784, 128),
    torch.nn.ReLU(),
    torch.nn.Linear(128, 10),
)

fp = ModelFingerprint(model, model_name="mnist_classifier_v1.0")
weight_hash = fp.generate_weight_hash()
arch_hash = fp.generate_architecture_signature()
final_fingerprint = fp.generate_composite_fingerprint()

print(f"Model Fingerprint: {final_fingerprint}")
```

What survives quantization and pruning (and what doesn’t):

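A SHA-256 hash like the one above identifies bit-for-bit copies only. When an attacker quantizes your model from FP32 to INT8, every weight changes slightly and the hash no longer matches, even though the weights remain numerically close to yours. Storing hashes of your own quantized releases (pre-quantization and post-quantization snapshots) catches copies of those exact artifacts, but not a clone the attacker quantized with a different scheme. To catch those, compare weights element-wise, for example with per-layer cosine similarity; aggregate weight histograms alone are a weak signal, because independently trained models with the same architecture often have similar histograms. Pruned models, where low-magnitude weights are zeroed, stay highly similar on the surviving weights and can be detected the same way.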
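A minimal sketch of an element-wise weight comparison that tolerates quantization, assuming PyTorch and assuming the suspect has been loaded (and, if necessary, dequantized) into the same architecture so parameter names and shapes line up. `weight_similarity`, `fake_int8_quantize`, and `make_model` are hypothetical helpers, and the models are untrained stand-ins. The independently initialized model supplies the baseline that gives the clone’s score its meaning. An attacker who permutes hidden units would defeat this naive version unless neurons are aligned first.

```python
import copy

import torch
import torch.nn.functional as F


def weight_similarity(reference, suspect):
    """Mean cosine similarity over matching weight matrices (biases skipped)."""
    ref_state, sus_state = reference.state_dict(), suspect.state_dict()
    scores = []
    for name, ref_w in ref_state.items():
        sus_w = sus_state.get(name)
        # Compare only floating-point matrices present in both with the same shape.
        if sus_w is None or ref_w.ndim < 2 or sus_w.shape != ref_w.shape:
            continue
        if not ref_w.is_floating_point():
            continue
        scores.append(
            F.cosine_similarity(ref_w.flatten().float(), sus_w.flatten().float(), dim=0).item()
        )
    return sum(scores) / len(scores) if scores else 0.0


def fake_int8_quantize(model):
    """Simulate per-tensor symmetric INT8 quantize-then-dequantize, in place."""
    with torch.no_grad():
        for p in model.parameters():
            scale = p.abs().max() / 127
            if scale > 0:
                p.copy_(torch.round(p / scale) * scale)
    return model


def make_model():
    return torch.nn.Sequential(
        torch.nn.Linear(784, 128), torch.nn.ReLU(), torch.nn.Linear(128, 10)
    )


torch.manual_seed(0)
original = make_model()
stolen_quantized = fake_int8_quantize(copy.deepcopy(original))
independent = make_model()  # same architecture, different random weights

sim_clone = weight_similarity(original, stolen_quantized)
sim_independent = weight_similarity(original, independent)
print(f"quantized clone: {sim_clone:.4f}, independent model: {sim_independent:.4f}")
```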

Phase 2: Dynamic Fingerprinting – Behavioral Triggers and Output Signatures

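Dynamic fingerprinting checks behavior instead of bytes. The sketch below does not train anything into the model: it generates a secret, seeded set of probe inputs, records the legitimate model’s responses, and later checks whether a suspect responds the same way. Backdoor-style watermarking, which trains the model to give specific answers on its triggers, uses the same detection logic.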

```python
import torch
import torch.nn.functional as F


class TriggerSetFingerprint:
    """
    Generate and validate trigger-set fingerprints.
    Triggers are secret, seeded probe inputs; the legitimate model's responses
    to them are recorded as a baseline. Nothing is trained into the model here:
    this is query-based fingerprinting, not backdoor watermarking.
    """

    def __init__(self, model, num_triggers=50, seed=42):
        self.model = model
        self.num_triggers = num_triggers
        self.seed = seed  # Treat as a secret: anyone with it can regenerate the triggers
        self.triggers = None
        self.expected_outputs = None

    def generate_trigger_set(self, input_dim=784):
        """Create reproducible pseudo-random trigger inputs from the secret seed."""
        self.triggers = []
        for i in range(self.num_triggers):
            # A dedicated generator per trigger avoids touching the global RNG
            gen = torch.Generator().manual_seed(self.seed + i)
            trigger = torch.randn(1, input_dim, generator=gen) * 0.1  # Low magnitude
            self.triggers.append(trigger)
        return self.triggers

    def validate_trigger_responses(self):
        """Run triggers through the model and store the outputs as the baseline."""
        self.model.eval()
        self.expected_outputs = []
        with torch.no_grad():
            for trigger in self.triggers:
                output = self.model(trigger)
                self.expected_outputs.append({
                    "logits": output[0].cpu().tolist(),
                    "argmax": output.argmax(dim=1).item(),
                })
        return self.expected_outputs

    def detect_clone(self, suspected_model, tolerance=0.05, clone_threshold=0.85):
        """
        Test a suspected model against the trigger set. A trigger "matches" when
        the cosine similarity between the suspect's logits and the baseline
        logits exceeds 1 - tolerance.
        Why this can detect clones: without the seed, an attacker does not know
        which inputs are probed, and an unrelated model's responses to random
        probes are unlikely to line up with yours.
        """
        suspected_model.eval()
        matches = 0
        with torch.no_grad():
            for trigger, expected in zip(self.triggers, self.expected_outputs):
                suspected_output = suspected_model(trigger).view(-1)
                baseline = torch.tensor(expected["logits"])
                similarity = F.cosine_similarity(suspected_output, baseline, dim=0)
                if similarity.item() > 1.0 - tolerance:
                    matches += 1

        match_rate = matches / self.num_triggers
        return {
            "is_clone": match_rate > clone_threshold,  # 85% trigger match = likely clone
            "match_rate": match_rate,
            "matches": matches,
            "mismatches": self.num_triggers - matches,
            "confidence": match_rate * 100,  # Match rate in percent, not a calibrated probability
        }


# Example usage
model = torch.nn.Sequential(
    torch.nn.Linear(784, 128),
    torch.nn.ReLU(),
    torch.nn.Linear(128, 10),
)

trigger_fp = TriggerSetFingerprint(model, num_triggers=50)
triggers = trigger_fp.generate_trigger_set()
expected_outputs = trigger_fp.validate_trigger_responses()

print(f"Generated {len(triggers)} trigger inputs")
print(f"Baseline outputs stored: {len(expected_outputs)} responses")

# Now test a suspected clone
suspected_clone = torch.nn.Sequential(
    torch.nn.Linear(784, 128),
    torch.nn.ReLU(),
    torch.nn.Linear(128, 10),
)
suspected_clone.load_state_dict(model.state_dict())  # Simulating a clone

result = trigger_fp.detect_clone(suspected_clone)
print(f"Clone Detection Result: {result}")
```

How dynamic fingerprints behave under fine-tuning:

When an attacker fine-tunes a stolen model on new data, trigger-set responses typically degrade gradually: fine-tuning adjusts the weights but rarely erases the original model’s behavior on every trigger at once. The detect_clone method above uses a single 85% cut-off; in practice it helps to keep graded bands, such as the following illustrative thresholds, calibrated on your own models:

- Exact clones: 95%+ match

- Fine-tuned derivatives: 80-95% match

- Inconclusive, gather more evidence: 60-80% match

- Likely unrelated models: below 60% match
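Graded bands are easier to apply consistently across investigations when they live in one small helper. The sketch below is hypothetical (`classify_match_rate` is not from any library): it uses 95%, 80%, and 60% as cut-offs and treats anything between 60% and 80% as inconclusive. Recalibrate these numbers by measuring match rates for your own fine-tuned variants and for unrelated models.

```python
def classify_match_rate(match_rate):
    """Map a trigger-set match rate in [0, 1] to an illustrative evidence band."""
    if not 0.0 <= match_rate <= 1.0:
        raise ValueError("match_rate must be between 0 and 1")
    if match_rate >= 0.95:
        return "exact_clone"
    if match_rate >= 0.80:
        return "likely_derivative"  # e.g. a fine-tuned copy
    if match_rate >= 0.60:
        return "inconclusive"  # gather more evidence (watermark, weight similarity)
    return "likely_unrelated"


for rate in (0.98, 0.88, 0.70, 0.30):
    print(rate, classify_match_rate(rate))
```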

Phase 3: Watermarking and Robustness – Fingerprints That Survive Compression

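The hardest scenario: an attacker compresses your model through quantization, pruning, or distillation. A weight watermark helps with the first two: a secret, low-magnitude pattern added to the weights remains statistically detectable after quantization and moderate pruning. It does not survive distillation, because a distilled student learns its own weights; for that case you rely on behavioral checks. Here’s a minimal weight-watermarking sketch: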

```python
import copy

import torch
import torch.nn as nn


class WatermarkedModelWrapper:
    """
    Embed a low-magnitude, secret-keyed pattern into model weights and detect it
    later by correlation. Detection is non-blind: the owner keeps a private copy
    of the clean (pre-watermark) weights. Tends to survive quantization, pruning,
    and light fine-tuning; it does NOT survive distillation.
    """

    def __init__(self, model, watermark_strength=0.01):
        self.model = model
        # Pattern std as a fraction of each weight tensor's std (0.01 = 1%)
        self.watermark_strength = watermark_strength
        self.watermark_pattern = None
        self.clean_weights = None

    def generate_watermark_pattern(self, seed=12345):
        """Create a deterministic pattern from a secret seed (the watermark key)."""
        gen = torch.Generator().manual_seed(seed)
        watermark = {}
        for name, param in self.model.named_parameters():
            if "weight" in name:
                noise = torch.randn(param.shape, generator=gen)
                watermark[name] = noise * self.watermark_strength * param.detach().std()
        self.watermark_pattern = watermark
        return watermark

    def embed_watermark(self):
        """Add the pattern to the weights, keeping a private copy of the clean weights."""
        self.clean_weights = {
            n: p.detach().clone()
            for n, p in self.model.named_parameters()
            if n in self.watermark_pattern
        }
        with torch.no_grad():
            for name, param in self.model.named_parameters():
                if name in self.watermark_pattern:
                    param.add_(self.watermark_pattern[name])

    def detect_watermark(self, suspected_model, threshold=0.8):
        """
        Correlate (suspect weights - clean weights) with the secret pattern.
        A copy of the watermarked model gives a correlation near 1; an
        independently trained model gives a correlation near 0.
        """
        correlations = []
        for name, param in suspected_model.named_parameters():
            pattern = self.watermark_pattern.get(name)
            if pattern is None or param.shape != pattern.shape:
                continue
            suspect = param.detach().flatten()
            keep = suspect != 0  # Skip weights zeroed by pruning
            if keep.sum() < 2:
                continue
            residual = (suspect - self.clean_weights[name].flatten())[keep]
            corr = torch.corrcoef(torch.stack([residual, pattern.flatten()[keep]]))[0, 1]
            correlations.append(corr.item())

        avg_correlation = sum(correlations) / len(correlations) if correlations else 0.0
        return {
            "is_watermarked": avg_correlation > threshold,
            "avg_correlation": avg_correlation,
            "individual_correlations": correlations,
        }


# Example usage
model = nn.Sequential(
    nn.Linear(784, 256), nn.ReLU(),
    nn.Linear(256, 128), nn.ReLU(),
    nn.Linear(128, 10),
)

watermark_wrapper = WatermarkedModelWrapper(model, watermark_strength=0.01)
watermark_wrapper.generate_watermark_pattern()
watermark_wrapper.embed_watermark()

# Simulate an attacker quantizing a stolen copy (fake INT8 quantize-dequantize)
quantized_model = copy.deepcopy(model)
with torch.no_grad():
    for p in quantized_model.parameters():
        scale = p.abs().max() / 127
        p.copy_(torch.round(p / scale) * scale)

result = watermark_wrapper.detect_watermark(quantized_model)
print(f"Watermark Detection: {result}")
```

How watermarks survive quantization: When weights are quantized from FP32 to INT8, each weight moves by at most half a quantization step. Because the watermark is spread across thousands of weights, that rounding error behaves like independent noise, and the correlation with the secret pattern stays high as long as the pattern is not much smaller than the quantization step. Pruning removes the pattern only from the zeroed weights, which detection can skip. Heavy fine-tuning or deliberate noise injection can push the correlation down, and distillation removes it entirely, so treat a watermark as one signal among several.
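A correlation is easier to defend as evidence when it comes with an estimate of how unlikely it is by chance. A minimal sketch using only the standard library, assuming that under the null hypothesis (no watermark) the residual and pattern entries behave like independent samples: the Fisher transformation turns a Pearson correlation r over n weights into an approximate z-score, and a one-sided p-value follows from the normal tail. `correlation_significance` is a hypothetical helper.

```python
import math


def correlation_significance(r, n):
    """Approximate z-score and one-sided p-value for Pearson r over n samples (Fisher z)."""
    if n <= 3:
        raise ValueError("need more than 3 samples")
    r = max(min(r, 0.999999), -0.999999)  # keep atanh finite
    z = math.atanh(r) * math.sqrt(n - 3)
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z) under the null
    return z, p_value


# A weak correlation over many weights is far from chance;
# the same r over a handful of weights is not.
print(correlation_significance(0.05, 200_000))
print(correlation_significance(0.05, 50))
```

Reporting the z-score and p-value next to the raw correlation makes clear that a threshold such as 0.8 is a conservative operating point, not the boundary of statistical significance.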

Detection & Monitoring: Building Your Fingerprint Defense Infrastructure

Fingerprinting is only effective if you deploy systematic monitoring to detect clones. Here’s the operational framework:

| Detection Method | Technical Approach | Tools | False Positives (indicative) |
|---|---|---|---|
| Static Weight Registry | Hash all production models; maintain a database of hashes and metadata | Custom fingerprint DB (hash-indexed lookup; optionally a Merkle tree for tamper-evident history) | Very Low (<1%) |
| Public Model Monitoring | Automated scraping of Hugging Face, Model Zoo, GitHub; fingerprint-match against private registry | Hugging Face API, GitHub search automation, custom crawler | Low (5%) |
| API Behavior Monitoring | Monitor your inference endpoints for query volumes and input patterns that suggest extraction (distillation) attempts | Datadog APM, Splunk, CloudTrail + custom inference monitoring | Medium (15%) |
| Trigger Set Validation | Periodically inject trigger-set inputs through your own APIs and external test harnesses; compare outputs to baseline | Custom trigger-set harness, Pytest CI/CD integration | Low (3%) |
| Supply Chain Fingerprinting | Hash models at build time, sign with cryptographic keys, embed fingerprint in model registry for automated verification | MLflow Model Registry + custom signing layer | Very Low (<1%) |
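For the API Behavior Monitoring row, one simple signal is query volume per client, since extraction attacks typically need many queries. A minimal sliding-window sketch using only the standard library; the class name, the limits, and the window length are illustrative assumptions to tune against your normal traffic, and a production system would combine this with checks on the input distribution.

```python
import time
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag API clients whose query count within a sliding window exceeds a limit."""

    def __init__(self, max_queries=1000, window_seconds=3600):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)  # client_id -> timestamps of recent queries

    def record(self, client_id, timestamp=None):
        """Record one query; return True if the client is now over the limit."""
        now = time.time() if timestamp is None else timestamp
        window = self.history[client_id]
        window.append(now)
        # Drop timestamps that have fallen out of the window.
        while window and window[0] <= now - self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries


monitor = QueryRateMonitor(max_queries=3, window_seconds=60)
flags = [monitor.record("client-a", timestamp=t) for t in (0, 10, 20, 30)]
print(flags)  # [False, False, False, True]: the 4th query within 60 s exceeds the limit
later = monitor.record("client-a", timestamp=200)  # earlier queries have expired
print(later)  # False
```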

Implementation: Automated Fingerprint Verification Pipeline

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

import requests


class ModelFingerprintMonitor:
    """
    Continuously monitor for model clones across public registries
    and internal infrastructure.
    """

    def __init__(self, private_fingerprint_registry, trigger_cases=None):
        # Dict of {fingerprint: model_metadata}
        self.registry = private_fingerprint_registry
        # List of (trigger_input, expected_response_sha256) pairs
        self.trigger_cases = trigger_cases or []
        self.logger = logging.getLogger("ModelFingerprintMonitor")
        self.alerts = []

    def monitor_huggingface(self):
        """
        Fetch candidate models, fingerprint their weights, and compare
        against the private registry for exact matches.
        """
        for model in self.fetch_huggingface_models():
            try:
                model_weights = self.download_model_weights(model["id"])
                if model_weights is None:
                    continue
                fingerprint = self.compute_fingerprint(model_weights)

                if fingerprint in self.registry:
                    # MATCH FOUND: clone detected
                    self.alerts.append({
                        "timestamp": datetime.now(timezone.utc).isoformat(),
                        "alert_type": "model_clone_detected",
                        "suspicious_model": model["id"],
                        "matched_fingerprint": fingerprint,
                        "private_model_id": self.registry[fingerprint]["model_id"],
                        "severity": "CRITICAL",
                        "action": "DMCA takedown candidate",
                    })
                    self.logger.critical(f"Clone detected: {model['id']}")
            except Exception as e:
                self.logger.warning(f"Failed to process {model['id']}: {e}")

    def monitor_internal_endpoints(self, endpoints):
        """
        Send trigger inputs to internal inference endpoints and compare a hash of
        each response with the recorded one. Exact hashing assumes the endpoint
        returns deterministic, identically serialized outputs; otherwise compare
        predicted labels or use a numeric tolerance.
        """
        for endpoint in endpoints:
            for trigger_input, expected_sig in self.trigger_cases:
                response = requests.post(
                    f"{endpoint}/predict", json={"input": trigger_input}, timeout=10
                )
                actual_sig = hashlib.sha256(
                    json.dumps(response.json(), sort_keys=True).encode()
                ).hexdigest()

                if actual_sig != expected_sig:
                    self.alerts.append({
                        "timestamp": datetime.now(timezone.utc).isoformat(),
                        "alert_type": "model_behavior_anomaly",
                        "endpoint": endpoint,
                        "severity": "HIGH",
                        "action": "Investigate model replacement or corruption",
                    })
                    self.logger.error(f"Behavior mismatch at {endpoint}")

    def fetch_huggingface_models(self):
        """Fetch candidate models (simplified stub).
        In production, use the huggingface_hub library (e.g. HfApi().list_models
        with a search term and limit) scoped to likely names and tags."""
        return []

    def download_model_weights(self, model_id):
        """Download model weights and return them as a state dict (stub)."""
        return None

    def compute_fingerprint(self, state_dict):
        """SHA-256 over tensors in sorted-name order; must match how the registry was built."""
        hasher = hashlib.sha256()
        for name, tensor in sorted(state_dict.items()):
            hasher.update(name.encode())
            hasher.update(tensor.detach().cpu().contiguous().numpy().tobytes())
        return hasher.hexdigest()


# Example usage
fingerprint_monitor = ModelFingerprintMonitor(
    private_fingerprint_registry={
        "abc123def456...": {"model_id": "proprietary_llm_v2.1", "owner": "company"}
    }
)
fingerprint_monitor.monitor_huggingface()
```

Forensic Detection Procedures

When a potential clone is detected, follow this forensic chain of custody:

- Isolate: Download the suspected model in its current state and seal with timestamped hash

- Fingerprint: Generate static, dynamic, and watermark fingerprints; compare to private registry

- Behavioral Test: Run trigger-set validation; document match rate and confidence level

- Timeline: Determine when clone was uploaded, track version history if available

- Evidence Package: Create signed report with fingerprint hashes, trigger-set results, chain of custody documentation

- Legal Handoff: Provide evidence package to legal/compliance for DMCA and enforcement action
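The Isolate step can be sketched as follows, assuming the suspected model has already been downloaded to a local file. The sketch streams the file through SHA-256 and writes a small JSON sealing record with a UTC timestamp and the source URL. `seal_evidence` and the record fields are hypothetical. A timestamp from your own clock is weaker evidence than a trusted third-party timestamp (for example, from an RFC 3161 timestamping service), which is worth adding for contested cases.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def sha256_file(path, chunk_size=1 << 20):
    """Stream a (possibly multi-GB) file through SHA-256."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def seal_evidence(model_path, source_url, analyst):
    """Create a sealing record for a downloaded model file and save it beside the file."""
    record = {
        "file": os.path.basename(model_path),
        "sha256": sha256_file(model_path),
        "size_bytes": os.path.getsize(model_path),
        "source_url": source_url,
        "sealed_at_utc": datetime.now(timezone.utc).isoformat(),
        "analyst": analyst,
    }
    with open(model_path + ".seal.json", "w") as f:
        json.dump(record, f, indent=2, sort_keys=True)
    return record


# Usage with a dummy file standing in for downloaded weights
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "suspect_model.safetensors")
    with open(path, "wb") as f:
        f.write(b"example weights")
    seal = seal_evidence(path, "https://huggingface.co/user/stolen_model", "analyst@example.com")
    print(seal["sha256"])
```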

Defensive Strategies: Deploying Fingerprinting in Production

Architectural Controls: Integrating Fingerprinting Into Model Development

Modern ML platforms must embed fingerprinting at every stage. Here’s the architecture:

Stage 1: Model Training & Validation

Before a model reaches production, generate and store its fingerprints. Use OWASP’s principle of “secure by design”: make fingerprinting a non-negotiable requirement:

```yaml
# Model training pipeline (pseudo-config)
model_training_stage:
  - train_model()
  - validate_accuracy()
  - FINGERPRINT_CHECKPOINT:
      - generate_watermark_pattern()   # embed first: the watermark changes the weights
      - generate_static_fingerprint()  # so hash the final, watermarked weights
      - generate_trigger_set()
      - store_to_registry()            # can't promote without a fingerprint
  - test_model()                       # confirm the watermark did not hurt accuracy
  - freeze_fingerprint()               # make immutable in the registry
```

Stage 2: Model Registry & Metadata

Store fingerprints alongside model weights in your model registry (MLflow, Hugging Face, internal database):

| Field | Value | Purpose |
|---|---|---|
| model_id | proprietary_fraud_detector_v3.2 | Unique identifier |
| fingerprint_hash | a7c9e4f2b8d1… | Static weight fingerprint |
| watermark_seed | 42857 | Watermark generation seed (keep the secret itself in an HSM or secrets manager; store only a reference in the registry) |
| trigger_set_hash | 3f8e2c1a9b6d… | Hash of trigger set |
| deployment_date | 2026-01-15 | Baseline for tracking clones |
| owner_email | (security team address) | Contact for alerts |
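A minimal sketch of the “can’t promote without fingerprint” rule, using fields like those in the registry table. The dataclass, `missing_fingerprint_fields`, `promote`, and the `watermark_seed_ref` field (a pointer to where the secret seed is stored rather than the seed itself) are all hypothetical; in a real pipeline this check would run in your CI job or as a registry hook.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional

HEX_64 = re.compile(r"[0-9a-f]{64}")  # a full SHA-256 hex digest


@dataclass
class RegistryRecord:
    model_id: str
    fingerprint_hash: Optional[str] = None
    trigger_set_hash: Optional[str] = None
    watermark_seed_ref: Optional[str] = None  # reference to a secret, not the secret
    deployment_date: Optional[date] = None
    owner_email: Optional[str] = None
    frozen: bool = False


def missing_fingerprint_fields(record):
    """Return the problems that block promotion to production."""
    problems = []
    for name in ("fingerprint_hash", "trigger_set_hash"):
        value = getattr(record, name)
        if value is None or not HEX_64.fullmatch(value):
            problems.append(f"{name} missing or not a SHA-256 hex digest")
    if not record.watermark_seed_ref:
        problems.append("watermark_seed_ref missing")
    if not record.owner_email:
        problems.append("owner_email missing")
    return problems


def promote(record):
    """Promote only fully fingerprinted models, then freeze the record."""
    problems = missing_fingerprint_fields(record)
    if problems:
        raise ValueError(f"cannot promote {record.model_id}: " + "; ".join(problems))
    record.frozen = True
    return record


draft = RegistryRecord(model_id="proprietary_fraud_detector_v3.2")
try:
    promote(draft)
except ValueError as err:
    print(err)
```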

Stage 3: Continuous Monitoring

Deploy automated monitoring on a 24/7 schedule:

- Public registry monitoring (Hugging Face, GitHub, Model Zoo): hourly fingerprint checks

- Internal endpoint validation: hourly trigger-set tests

- Alerting: Slack/PagerDuty integration for critical matches

Operational Mitigations: Processes and Team Structure

Process: Model Fingerprint Governance

- Responsibility: Security team + ML ops jointly own fingerprinting pipeline

- Cadence: Weekly verification of all fingerprints in production; monthly audit of historical fingerprint database

- Escalation: Any clone detection triggers immediate incident response (similar to security breach protocol)

Team Structure

- ML Security Engineer (dedicated): Owns fingerprinting automation, monitoring infrastructure, alert response

- Forensic Analyst (on call): Handles clone detection incidents, evidence collection, legal handoff

- Legal/Compliance (informed): Reviews fingerprint evidence for takedown and enforcement decisions

Incident Response Playbook

When a clone is detected:

- T+0 min: Automated alert to on-call ML security engineer

- T+15 min: Download suspected model, generate comprehensive fingerprint evidence package

- T+30 min: Briefing to security leadership and legal team

- T+2 hours: Initiate takedown (DMCA, GitHub/Hugging Face abuse report, law enforcement notification if warranted)

- T+24 hours: Post-incident review; assess if incident reveals gaps in IP protection

Technology Solutions: Tools and Frameworks

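Guardrails (Open Source)

Guardrails is an open-source framework for validating and constraining the inputs and outputs of LLM applications. It is not a model fingerprinting or watermarking library, so use it alongside fingerprinting (for example, to police what a deployed model returns) rather than as a substitute. If you add a watermarking step at deployment, the integration point looks roughly like this; the module and class below are hypothetical placeholders, not Guardrails APIs:

```python
from my_watermarking import WatermarkGuard  # hypothetical in-house module

watermark = WatermarkGuard(
    secret_key="your_secret_seed_12345",
    sensitivity="imperceptible",  # intended not to change model outputs noticeably
)

# Apply to model during deployment
guarded_model = watermark.protect(model)
```

TinyMark (Research)

TinyMark is described as a lightweight fingerprinting framework for resource-constrained models (edge models, mobile models, quantized models), enabling fingerprinting even when model size is optimized. The interface below is illustrative; check the project’s own documentation for its actual API: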

```python
from tinymark import TinyFingerprint  # illustrative interface

fp = TinyFingerprint(
    model=quantized_model,
    fingerprint_type="lightweight",
    compression_resistant=True,  # intended to survive quantization
)

# Verify fingerprint even on edge device
is_authentic = fp.verify_on_device()
```

MLflow Model Registry Integration

Extend MLflow to automatically fingerprint all registered models:

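```python
from datetime import datetime, timezone

import mlflow
import mlflow.pytorch

# Local module holding the ModelFingerprint class from Phase 1
from model_fingerprinter import ModelFingerprint


# Custom MLflow helper
class FingerprintedModel:
    def register(self, model, model_name):
        # Generate fingerprint (weight and architecture hashes first)
        fp = ModelFingerprint(model, model_name=model_name)
        fp.generate_weight_hash()
        fp.generate_architecture_signature()
        fingerprint_hash = fp.generate_composite_fingerprint()

        # Log the model so it has a URI that MLflow can register
        with mlflow.start_run():
            model_info = mlflow.pytorch.log_model(model, artifact_path="model")

        # Register with fingerprint metadata
        return mlflow.register_model(
            model_uri=model_info.model_uri,
            name=model_name,
            tags={
                "fingerprint": fingerprint_hash,
                "fingerprint_date": datetime.now(timezone.utc).isoformat(),
            },
        )
```

Model Card Enhancement

Update model cards with fingerprint information for transparency (without exposing trigger sets):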

```yaml
# huggingface_model_card.md
---
fingerprint_verification: true
fingerprint_available: true
static_fingerprint: "a7c9e4f2b8d1e6f3a9c2e5b8d1f4a7e0"
watermark_embedded: true
trigger_set_validation: true
---
```

The Threat Landscape Ahead: Evolution of Extraction and Counter-Fingerprinting

How Attackers Will Evolve

As fingerprinting becomes standard, attackers will adapt. Expect:

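Adversarial Fingerprint Removal

Attackers will attempt adversarial fine-tuning to destroy trigger-set signatures. Defense: maintain multiple independent trigger sets. An attacker who doesn’t know them has to disrupt every set without damaging accuracy, which is far harder than scrubbing one. Use ensemble validation that declares a match when a quorum of sets (for example, a majority) still matches; requiring all sets to match would let an attacker win by breaking just one.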
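A sketch of that quorum rule, assuming each independent trigger set has already produced a match rate (for example, from a trigger-set check like the one in Phase 2). `ensemble_verdict`, the 0.85 per-set threshold, and the default majority quorum are illustrative assumptions. The design goal is that an attacker must break enough sets to fall below the quorum, not just one.

```python
def ensemble_verdict(match_rates, per_set_threshold=0.85, quorum=None):
    """Declare a match when at least `quorum` independent trigger sets match."""
    if not match_rates:
        raise ValueError("need at least one trigger set")
    if quorum is None:
        quorum = len(match_rates) // 2 + 1  # simple majority
    passing = sum(1 for rate in match_rates if rate >= per_set_threshold)
    return {
        "matched_sets": passing,
        "total_sets": len(match_rates),
        "is_match": passing >= quorum,
    }


# One trigger set was scrubbed by adversarial fine-tuning; the other two still match.
print(ensemble_verdict([0.93, 0.41, 0.90]))
```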

Distillation with Noise

Attackers will distill your model while adding random noise to its outputs, hoping to corrupt trigger-set signatures. Defense: use robust trigger sets, meaning triggers whose signature (for example, the top-1 label rather than exact logits) stays stable under small output perturbations. For the underlying trigger-set watermarking technique, see Adi et al., “Turning Your Weakness Into a Strength: Watermarking Deep Neural Networks by Backdooring” (USENIX Security 2018).

Supply Chain Attacks

Rather than extracting your model, attackers will target your fingerprinting infrastructure, stealing trigger-set definitions or watermark seeds. Defense: treat fingerprint secrets with the same rigor as cryptographic keys. Store them in HSMs (Hardware Security Modules), audit access logs, and rotate access credentials quarterly. A watermark seed already embedded in a deployed model cannot be rotated without re-watermarking that model, so rotation mainly protects future releases.
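One way to limit what a single leaked seed exposes is to derive each model’s trigger and watermark seeds from one master key with HMAC-SHA256 over the model ID and purpose. The sketch below uses Python’s standard `hmac` module with an in-memory placeholder key for illustration; in practice the HMAC would be computed inside the HSM or key-management service, and the `model_id|purpose` label format is a hypothetical convention. Because HMAC is one-way, a per-model seed can be disclosed to an expert witness without revealing the master key or other models’ seeds.

```python
import hashlib
import hmac


def derive_seed(master_key: bytes, model_id: str, purpose: str) -> int:
    """Derive a deterministic 63-bit seed for one model and one purpose."""
    label = f"{model_id}|{purpose}".encode()
    digest = hmac.new(master_key, label, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") >> 1  # fits a signed 64-bit seed


master_key = b"example-master-key-kept-in-an-HSM"  # placeholder; never hard-code real keys
trigger_seed = derive_seed(master_key, "proprietary_fraud_detector_v3.2", "trigger_set")
watermark_seed = derive_seed(master_key, "proprietary_fraud_detector_v3.2", "watermark")
print(trigger_seed, watermark_seed)
```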

Synthetic Model Generation

Instead of stealing your model, attackers will train synthetic clones from scratch using similar data. These won’t match your fingerprints, but they’ll have similar functional behavior. Defense: pair fingerprinting with behavioral monitoring. Flag externally available models that outperform published benchmarks on your domain.

Emerging Variants and Industry Evolution

Multi-Model Fingerprinting for Ensemble Systems

Organizations deploying ensemble models (multiple models voting on decisions) will require composite fingerprinting, where the ensemble’s decision process itself is fingerprinted. This makes it detectable when an attacker replaces individual ensemble members.

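Federated Model Fingerprinting

As federated learning grows, fingerprinting must work across distributed training. Each participant’s contribution gets a local fingerprint, and the global model’s fingerprint for a round is a hash over all local fingerprints. This creates an auditable record of exactly which contributions went into each round. It does not by itself detect poisoning, but if the aggregator fingerprints each update it actually receives, a poisoned update flagged later by anomaly detection can be traced back to its participant.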
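A minimal sketch of the per-round aggregation, assuming each local fingerprint is a hex SHA-256 digest computed by the aggregator from the update it received. Sorting before hashing makes the result independent of arrival order, and the round number is mixed in so identical contributions in different rounds yield different fingerprints. `round_fingerprint` is a hypothetical helper; it provides an audit trail, not a poisoning detector.

```python
import hashlib


def round_fingerprint(round_id, local_fingerprints):
    """Combine participants' fingerprints for one round into a single hash."""
    digest = hashlib.sha256(f"round:{round_id}".encode())
    for fp in sorted(local_fingerprints):  # order-independent
        digest.update(bytes.fromhex(fp))
    return digest.hexdigest()


local = [hashlib.sha256(f"participant-{i}-update".encode()).hexdigest() for i in range(3)]
print(round_fingerprint(7, local))
```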

Hardware-Backed Fingerprinting

AI accelerators increasingly support hardware-isolated (confidential) execution. Future fingerprinting may embed cryptographic verification directly in inference hardware, making fingerprint removal far harder without physical access.

The Forensic Process: From Detection to Legal Action

Step 1: Verify Fingerprint Match with High Confidence

When a suspected clone is detected, gather multiple confirmations:

```python
class ForensicValidator:
    """Forensic-grade validation for fingerprint evidence.

    Each test is a callable that takes the suspected model and returns
    {"passed": bool, "confidence": float between 0 and 1}. Plug in the static
    hash, architecture, trigger-set, and watermark checks described above.
    """

    def __init__(self, tests):
        # e.g. {"static_weight_hash": ..., "architecture_signature": ...,
        #       "trigger_set_match": ..., "watermark_correlation": ...}
        if not tests:
            raise ValueError("at least one test is required")
        self.tests = tests

    def validate_match(self, suspected_model, confidence_threshold=0.95):
        """
        Run multiple independent tests to establish a high-confidence match.
        Any single test can be contested in court; agreement across independent
        methods makes the evidence much harder to dispute. An exact weight hash
        fails for quantized or fine-tuned copies, so derivatives may need
        similarity-based tests instead.
        """
        results = {name: test(suspected_model) for name, test in self.tests.items()}

        # All tests must pass
        all_passed = all(r["passed"] for r in results.values())
        avg_confidence = sum(r["confidence"] for r in results.values()) / len(results)
        verified = all_passed and avg_confidence > confidence_threshold

        return {
            "verified_clone": verified,
            "individual_results": results,
            "overall_confidence": avg_confidence,
            "evidentiary_grade": "forensic_grade" if verified else "insufficient",
        }
```

Step 2: Establish Chain of Custody

Document every interaction with the suspected model:

- Timestamp: Date/time of initial detection (automated log)

- Source URL/Location: Exact URL where model was found (screenshots with timestamp)

- Model Download: Hash of downloaded model file (cryptographic proof of specific version)

- Fingerprint Testing: Complete test results with random seeds for reproducibility

- Witness: Security team member who validated results (internal attestation)

- Sealed Storage: Copy of model placed in read-only archival storage with access logs

This chain makes it much harder for an adversary to claim “the model you tested was different from what we deployed.”
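A tamper-evident way to keep that documentation is a hash-chained log: each entry stores the hash of the previous entry, so editing any earlier entry breaks the chain from that point on. A minimal sketch with the standard library; the class and field names are hypothetical, and a real deployment would also sign entries and ship them to write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class CustodyLog:
    """Append-only, hash-chained chain-of-custody log."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(entry):
        payload = json.dumps(entry, sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()

    def append(self, action, actor, details):
        entry = {
            "index": len(self.entries),
            "timestamp_utc": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "actor": actor,
            "details": details,
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else "0" * 64,
        }
        entry["entry_hash"] = self._hash(entry)  # hash covers every field above
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and link; return False if any entry was altered."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev or self._hash(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True


log = CustodyLog()
log.append("detected", "monitor", {"url": "https://huggingface.co/user/stolen_model"})
log.append("downloaded", "analyst", {"sha256": "<file hash>"})
print(log.verify())  # True
log.entries[0]["details"]["url"] = "https://example.com/other"  # simulated tampering
print(log.verify())  # False
```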

Step 3: Generate Forensic Evidence Package

Create a comprehensive report for legal:

```text
FORENSIC EVIDENCE PACKAGE
=========================

CASE: Suspected Model Extraction - Model ID: proprietary_fraud_detector_v3.2
DATE: 2026-01-24
ANALYST: Security Team, ML Security Division

1. EXECUTIVE SUMMARY
   - Suspected clone found at: https://huggingface.co/user/stolen_model
   - Detection method: Static fingerprint match + trigger-set validation
   - Confidence level: 98.7% (forensic grade)
   - Recommendation: Immediate DMCA takedown

2. STATIC FINGERPRINTING ANALYSIS
   Private Model Fingerprint:   a7c9e4f2b8d1e6f3a9c2e5b8d1f4a7e0
   Suspected Clone Fingerprint: a7c9e4f2b8d1e6f3a9c2e5b8d1f4a7e0
   Match: CONFIRMED (100%)

   Architecture Signature Match: CONFIRMED
   Total Parameters: 847,123,456 (both models)
   Layer Configuration: Identical

3. DYNAMIC FINGERPRINTING ANALYSIS
   Trigger Set Validation Results:
   - Total Triggers: 50
   - Matching Responses: 49/50 (98%)
   - Confidence: 98% (exceeds 85% threshold for clone identification)

   Trigger Mismatch Details:
   - Trigger #23: Minor floating-point variance (expected due to inference precision)

4. WATERMARK ANALYSIS
   Watermark Correlation: 0.94 (threshold: 0.80)
   Status: CONFIRMED
   The model weights contain the embedded watermark pattern, indicating
   direct derivation from the proprietary model.

5. TIMELINE
   - Model training completed: 2025-11-15
   - Model deployed to production: 2025-12-01
   - Suspected clone uploaded to HF: 2026-01-18 (48 days after deployment)
   - Clone download count: 127 (as of detection date)

6. LEGAL IMPLICATIONS (for review by counsel)
   - Copyright Infringement: Copyright protection for model weights is legally
     unsettled; counsel should assess whether the exact copy is infringing
   - Trade Secret Misappropriation: Model represents 6 months of R&D; has not
     been publicly disclosed
   - DMCA Violation: Circumventing access controls (if model was access-restricted)
   - Quantifiable Damages: Model development cost + lost licensing revenue
     + competitive harm

7. CHAIN OF CUSTODY
   [Detailed log of every interaction with suspected model, signed timestamps]

8. RECOMMENDATIONS
   - Immediate: File DMCA takedown with Hugging Face
   - 24 hours: Notify GitHub, Model Zoo, and other registries
   - 48 hours: Consult IP counsel regarding civil litigation or law enforcement referral
   - Ongoing: Monitor for derivatives or further distribution
```

Step 4: DMCA Takedown and Platform Enforcement

With your forensic evidence package in hand, file DMCA takedown notices with the platforms hosting the clone:

Hugging Face DMCA Template:

```text
Subject: DMCA Takedown Notice - Unauthorized Model Distribution

I am writing to report the infringement of intellectual property rights on your platform.

INFRINGING MATERIAL:
- URL: https://huggingface.co/user/stolen_model
- Model name: stolen_model
- Infringing content: Unauthorized copy of proprietary ML model "proprietary_fraud_detector_v3.2"

WORK INFRINGED:
- Proprietary AI model (trade secret and copyrighted work)
- Developed by [Company Name] and not authorized for public distribution

EVIDENCE OF INFRINGEMENT:
The attached forensic evidence package demonstrates:
- 100% static fingerprint match to the original model
- 98% trigger-set response match (indicating a direct copy)
- Watermark correlation of 0.94 (indicating the original weights are preserved)

These technical tests, performed by our ML security team, establish that the
infringing model is a verbatim copy of our proprietary work.

We request immediate removal of the infringing model and all versions/forks.

[Statement of good-faith belief that the use is not authorized; statement that the
information in this notice is accurate and, under penalty of perjury, that you are
authorized to act on behalf of the copyright owner; contact information; physical
or electronic signature]
```

Step 5: Law Enforcement Cooperation (If Applicable)

In cases of large-scale distribution or commercial exploitation:

- Contact your national cybercrime unit (FBI in the US, NCA in the UK, Polizia Postale in Italy)

- Provide forensic evidence package

- Reference relevant laws: the CFAA (Computer Fraud and Abuse Act) and the Economic Espionage Act's trade-secret theft provision (18 U.S.C. § 1832) in the US, or national equivalents

- Law enforcement can seek court orders, such as seizure warrants, which carry more force than a civil DMCA notice

Implementing Fingerprinting at Scale: Multi-Model Systems

Organizations deploying hundreds or thousands of models face a scaling challenge. Here’s how to manage it:

Fingerprint Database Architecture

```sql
-- Fingerprint registry schema (PostgreSQL)
CREATE TYPE fingerprint_kind AS ENUM ('static', 'dynamic', 'watermark');
CREATE TYPE detection_status AS ENUM ('new', 'investigating', 'confirmed_clone', 'false_positive');

CREATE TABLE models (
    model_id        UUID PRIMARY KEY,
    model_name      VARCHAR(255),
    owner_email     VARCHAR(255),
    deployment_date TIMESTAMP,
    archived        BOOLEAN DEFAULT FALSE
);

CREATE TABLE fingerprints (
    fingerprint_id   UUID PRIMARY KEY,
    model_id         UUID REFERENCES models (model_id),
    fingerprint_type fingerprint_kind,
    fingerprint_hash VARCHAR(256),
    seed             INTEGER,  -- for reproducible generation
    created_at       TIMESTAMP,
    UNIQUE (model_id, fingerprint_type)
);

CREATE TABLE trigger_sets (
    trigger_set_id       UUID PRIMARY KEY,
    model_id             UUID REFERENCES models (model_id),
    trigger_hash         VARCHAR(256),
    expected_output_hash VARCHAR(256),
    created_at           TIMESTAMP
);

CREATE TABLE detection_events (
    event_id                 UUID PRIMARY KEY,
    timestamp                TIMESTAMP,
    suspected_model_url      VARCHAR(500),
    matched_model_id         UUID REFERENCES models (model_id),
    matched_fingerprint_hash VARCHAR(256),
    match_type               fingerprint_kind,
    confidence               FLOAT,
    status                   detection_status,
    action                   VARCHAR(500)
);
```

Fingerprint Lookup Optimization

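With thousands of models, fingerprint lookups must be fast and the registry itself must be tamper-evident. A hash index keyed by fingerprint gives average-case O(1) lookups, while a Merkle tree over the registry entries lets you check that no entry has been altered since the root was recorded:

```python
import json

from merkletools import MerkleTools  # pip install merkletools


class OptimizedFingerprintRegistry:
    """Hash-index lookups plus a Merkle tree for tamper evidence."""

    def __init__(self):
        self.models = {}  # model_id -> {fingerprint_type: fingerprint}
        self.index = {}   # (fingerprint_type, fingerprint) -> model_id
        self.merkle_tree = MerkleTools(hash_type="sha256")

    @staticmethod
    def _leaf(model_id, fingerprints):
        # Canonical JSON so the same entry always hashes to the same leaf
        return json.dumps({"model_id": model_id, "fingerprints": fingerprints}, sort_keys=True)

    def add_model(self, model_id, fingerprints):
        """Register a model (each model_id once), index it, and rebuild the tree."""
        self.models[model_id] = fingerprints
        for fp_type, fp in fingerprints.items():
            self.index[(fp_type, fp)] = model_id
        # do_hash=True: the leaf is raw text, not an existing hex digest
        self.merkle_tree.add_leaf(self._leaf(model_id, fingerprints), do_hash=True)
        self.merkle_tree.make_tree()

    def find_model_by_fingerprint(self, suspect_fingerprint, fingerprint_type):
        """Average-case O(1) dictionary lookup instead of an O(n) scan."""
        return self.index.get((fingerprint_type, suspect_fingerprint))

    def merkle_root(self):
        return self.merkle_tree.get_merkle_root()

    def verify_registry_integrity(self, trusted_root):
        """Rebuild the root from stored entries and compare it with a trusted (e.g. signed) root."""
        rebuilt = MerkleTools(hash_type="sha256")
        for model_id, fps in self.models.items():
            rebuilt.add_leaf(self._leaf(model_id, fps), do_hash=True)
        rebuilt.make_tree()
        return rebuilt.get_merkle_root() == trusted_root
```

Multi-Region Synchronization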

For organizations with distributed models:

- Primary registry: Central repository in your secure infrastructure (encrypted database)

- Replica registries: Read-only copies in each region for faster local lookups

- Sync protocol: Cryptographically signed updates from primary to replicas (prevents tampering)

- Conflict resolution: Primary is source of truth; replicas sync hourly
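The signed-update step in this list can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: the update format (`version`, `merkle_root`) and the names `sign_update` and `Replica` are hypothetical, and it uses an HMAC shared key for brevity. Any replica holding an HMAC key could also forge updates, so an asymmetric scheme such as Ed25519, where only the primary holds the signing key, is the better fit for read-only replicas.

```python
import hashlib
import hmac
import json


def sign_update(key: bytes, version: int, merkle_root: str) -> dict:
    """Primary: package a registry snapshot reference with an HMAC-SHA256 tag."""
    payload = {"version": version, "merkle_root": merkle_root}
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    return {"payload": payload, "tag": hmac.new(key, message, hashlib.sha256).hexdigest()}


class Replica:
    """Read-only replica that applies only authentic, newer updates."""

    def __init__(self, key: bytes):
        self._key = key
        self.version = 0
        self.merkle_root = None

    def apply(self, update: dict) -> bool:
        message = json.dumps(update["payload"], sort_keys=True).encode("utf-8")
        expected = hmac.new(self._key, message, hashlib.sha256).hexdigest()
        # Constant-time comparison avoids leaking tag bytes through timing
        if not hmac.compare_digest(expected, update["tag"]):
            return False  # tampered or forged update
        if update["payload"]["version"] <= self.version:
            return False  # stale or replayed update; the primary is the source of truth
        self.version = update["payload"]["version"]
        self.merkle_root = update["payload"]["merkle_root"]
        return True
```

The monotonic version check is what lets the hourly sync reject a replayed older snapshot even when its signature is valid.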

Legal and Compliance Integration

How Fingerprinting Evidence Supports IP Protection

Unique, reproducible technical evidence can play the role in model-theft disputes that source-code comparison plays in software cases. Fingerprinting provides:

- Evidence of Copying: Identical static fingerprints indicate a verbatim copy of your weights

- Evidence of Derivation: Trigger-set matches show the model was derived from yours, not independently developed with coincidentally similar behavior

- Support for Damages: The deployment timeline plus the competitor's advantage helps quantify harm

- Evidence of Willfulness: Attempts to remove fingerprints indicate knowing infringement; under US copyright law, willful infringement can raise statutory damages to as much as $150,000 per work

DMCA Takedown Effectiveness

The DMCA (US) and the EU Digital Services Act condition platforms' liability protections on acting expeditiously on valid notices of infringing content. Forensic-grade fingerprinting evidence makes your notice specific and hard to dispute. GitHub, for example, publishes its DMCA takedown policy; check each platform's current procedure before filing.

Supporting Law Enforcement

If you have evidence of organized model theft (multiple models extracted, significant commercial impact), file reports with law enforcement.

Practical Integration: Building Your Fingerprinting Stack

The 30-Day Rollout Plan

Week 1: Inventory and Baseline

- List all production models

- Generate static, dynamic, and watermark fingerprints for each

- Store in encrypted registry with access controls

- Cost: 40 engineer-hours
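The static fingerprint from Week 1 can be as simple as a canonical hash of the weights. The sketch below assumes weights are available as a mapping from parameter name to a flat list of floats, a stand-in for whatever your framework exposes; `static_fingerprint` is a hypothetical helper. Parameters are hashed in sorted-name order with length prefixes so the digest does not depend on dictionary order, and values are packed as little-endian float32. An exact hash only matches verbatim copies: any fine-tuning or re-quantization changes it, which is why the dynamic and watermark fingerprints are generated alongside it.

```python
import hashlib
import struct


def static_fingerprint(weights: dict[str, list[float]]) -> str:
    """SHA-256 over parameter names and float32 values in a canonical order."""
    h = hashlib.sha256()
    for name in sorted(weights):
        values = weights[name]
        encoded_name = name.encode("utf-8")
        # Length prefixes keep the byte stream unambiguous across parameter boundaries
        h.update(struct.pack("<I", len(encoded_name)))
        h.update(encoded_name)
        h.update(struct.pack("<Q", len(values)))
        h.update(struct.pack(f"<{len(values)}f", *values))
    return h.hexdigest()
```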

Week 2: Monitoring Infrastructure

- Deploy automated monitoring for public registries (Hugging Face, GitHub, Model Zoo)

- Configure continuous trigger-set validation on internal endpoints

- Set up Slack/PagerDuty alerting

- Cost: 30 engineer-hours + cloud infrastructure (~$200/month)
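Continuous trigger-set validation comes down to replaying secret trigger inputs against an endpoint and counting how many outputs match the ones your model produced when the trigger set was created. This hypothetical sketch assumes `query_model` is whatever client calls the endpoint under test and that outputs are numeric vectors. It compares raw outputs within a tolerance rather than the exact `expected_output_hash` from the registry schema, since an exact hash fails on any precision difference like the one noted for Trigger #23. The 0.85 default mirrors the clone-identification threshold in the sample evidence package; tune it to your own false-positive budget.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def validate_trigger_set(
    query_model: Callable[[str], Vector],
    triggers: Sequence[str],
    expected_outputs: Sequence[Vector],
    threshold: float = 0.85,
    rel_tol: float = 1e-4,
    abs_tol: float = 1e-6,
) -> dict:
    """Return the fraction of triggers whose outputs match and whether it clears the threshold."""
    if len(triggers) != len(expected_outputs):
        raise ValueError("each trigger needs exactly one expected output")
    mismatches = []
    for i, (trigger, expected) in enumerate(zip(triggers, expected_outputs)):
        observed = query_model(trigger)
        same = len(observed) == len(expected) and all(
            math.isclose(o, e, rel_tol=rel_tol, abs_tol=abs_tol)
            for o, e in zip(observed, expected)
        )
        if not same:
            mismatches.append(i)
    total = len(triggers)
    matched = total - len(mismatches)
    match_rate = matched / total if total else 0.0
    return {
        "total": total,
        "matched": matched,
        "match_rate": match_rate,
        "mismatched_indices": mismatches,
        "suspected_clone": match_rate >= threshold,
    }
```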

Week 3: Incident Response

- Build forensic validation and evidence package automation

- Train security team on DMCA takedown process

- Establish playbook for clone detection incidents

- Cost: 20 engineer-hours
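Evidence-package automation can start as a function that assembles test results into a structured record and seals it with a digest. In this hypothetical sketch, `build_evidence_summary` and its field names are our own assumptions. It computes the timeline gap from the dates rather than by hand (48 days between the sample case's 2025-12-01 deployment and 2026-01-18 upload) and hashes the canonical JSON, so the sealed record can be referenced from the chain-of-custody log.

```python
import hashlib
import json
from datetime import date


def build_evidence_summary(
    case_id: str,
    suspect_url: str,
    static_match: bool,
    triggers_matched: int,
    triggers_total: int,
    watermark_corr: float,
    deployed_on: date,
    uploaded_on: date,
    trigger_threshold: float = 0.85,
    watermark_threshold: float = 0.80,
) -> dict:
    """Assemble a machine-readable evidence record and seal it with a SHA-256 digest."""
    trigger_rate = triggers_matched / triggers_total
    summary = {
        "case_id": case_id,
        "suspect_url": suspect_url,
        "static_fingerprint_match": static_match,
        "trigger_match_rate": round(trigger_rate, 4),
        "trigger_set_confirmed": trigger_rate >= trigger_threshold,
        "watermark_correlation": watermark_corr,
        "watermark_confirmed": watermark_corr >= watermark_threshold,
        "days_deployment_to_upload": (uploaded_on - deployed_on).days,
    }
    summary["all_checks_passed"] = (
        static_match and summary["trigger_set_confirmed"] and summary["watermark_confirmed"]
    )
    canonical = json.dumps(summary, sort_keys=True).encode("utf-8")
    return {"summary": summary, "sha256": hashlib.sha256(canonical).hexdigest()}
```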

Week 4: Hardening and Audit

- Conduct red team exercise: attempt to defeat fingerprinting

- Fix any gaps (add additional trigger sets if needed)

- Final security audit

- Cost: 25 engineer-hours
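A red-team pass can measure how much perturbation a watermark survives before its correlation drops below the 0.80 threshold used in the sample evidence package. The sketch below assumes a simple additive spread-spectrum watermark, which is our assumption rather than a scheme the article specifies: a secret ±1 pattern derived from a seed is scaled by a small strength and added to the base weights, and detection correlates the suspect's deviation from the owner's unwatermarked base weights with that pattern. All function names are hypothetical, and weights are flat Python lists to keep it dependency-free.

```python
import math
import random


def secret_pattern(seed: int, n: int) -> list[float]:
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(base: list[float], pattern: list[float], strength: float) -> list[float]:
    return [w + strength * p for w, p in zip(base, pattern)]


def pearson(x: list[float], y: list[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy) if vx and vy else 0.0


def watermark_correlation(suspect, base, pattern) -> float:
    # Correlate the suspect's deviation from the clean base weights with the secret pattern
    return pearson([s - b for s, b in zip(suspect, base)], pattern)


def red_team_noise(watermarked, base, pattern, sigmas, threshold=0.80, seed=0):
    """Add Gaussian noise of each scale and report whether the watermark still clears the threshold."""
    rng = random.Random(seed)
    results = {}
    for sigma in sigmas:
        attacked = [w + rng.gauss(0.0, sigma) for w in watermarked]
        corr = watermark_correlation(attacked, base, pattern)
        results[sigma] = (round(corr, 3), corr >= threshold)
    return results
```

If modest noise pushes the correlation under the threshold, that is the kind of gap Week 4 should close, for example by raising the embedding strength or adding trigger sets.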

Total Cost: ~120 engineer-hours + $2,400 annual cloud infrastructure = well under the cost of a single model theft

Conclusion: From Undetectable Loss to Prosecutable Crime

Model theft in 2026 remains a growing threat, but fingerprinting has fundamentally changed the economics. Where attackers previously extracted models with impunity, fingerprinting makes clones detectable, traceable, and prosecutable.

The core insight: you don’t prevent model extraction through fingerprinting. You make it irrelevant. Once fingerprinting detects it, an extracted model in an attacker’s infrastructure loses its value: the attacker can’t deploy it without being detected, can’t strip the forensic evidence by modifying it, and has little defense against legal action backed by cryptographically verifiable evidence.

Your next steps:

- Inventory your models: Which proprietary models have the highest value? Start fingerprinting there.

- Deploy static fingerprinting immediately: Weight hashing is trivial and provides instant baseline detection.

- Add dynamic fingerprinting within 30 days: Trigger-set validation takes 2-3 weeks to implement and dramatically increases confidence.

- Scale to production within 90 days: Integrate into your model deployment pipeline so every new model is automatically fingerprinted.

- Establish incident response: Train your security team to respond to detections; consult legal on enforcement strategy.
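Automatic fingerprinting at deployment time can be wired in as a pipeline step that registers each artifact before it ships. This hypothetical sketch uses SQLite from the standard library with a trimmed-down version of the registry schema (TEXT in place of UUID and a CHECK constraint in place of ENUM, since SQLite has neither type); `register_at_deploy` and the artifact bytes are illustrative. The `UNIQUE (model_id, fingerprint_type)` constraint mirrors the schema, and the hash lookup refuses an artifact whose fingerprint is already registered under a different model name.

```python
import hashlib
import sqlite3
import uuid

SCHEMA = """
CREATE TABLE IF NOT EXISTS models (
    model_id   TEXT PRIMARY KEY,
    model_name TEXT
);
CREATE TABLE IF NOT EXISTS fingerprints (
    fingerprint_id   TEXT PRIMARY KEY,
    model_id         TEXT REFERENCES models (model_id),
    fingerprint_type TEXT CHECK (fingerprint_type IN ('static', 'dynamic', 'watermark')),
    fingerprint_hash TEXT,
    UNIQUE (model_id, fingerprint_type)
);
"""


def register_at_deploy(conn: sqlite3.Connection, model_name: str, artifact: bytes) -> str:
    """Fingerprint the artifact and register it; refuse if the hash belongs to another model."""
    digest = hashlib.sha256(artifact).hexdigest()
    row = conn.execute(
        "SELECT m.model_name FROM fingerprints f JOIN models m USING (model_id) "
        "WHERE f.fingerprint_type = 'static' AND f.fingerprint_hash = ?",
        (digest,),
    ).fetchone()
    if row is not None and row[0] != model_name:
        raise ValueError(f"artifact already registered as {row[0]!r}")
    if row is None:
        model_id = str(uuid.uuid4())
        with conn:  # commit both inserts together
            conn.execute("INSERT INTO models VALUES (?, ?)", (model_id, model_name))
            conn.execute(
                "INSERT INTO fingerprints VALUES (?, ?, 'static', ?)",
                (str(uuid.uuid4()), model_id, digest),
            )
    return digest
```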

Fingerprinting transforms model theft from an uncontrollable loss into a managed risk. The threat of extraction remains, but detection, attribution, and enforcement are now within your control.
